Atom Feed Reader CDF

The web format of this guide reflects the most current release. Guides for older iterations are available in PDF format.

Integration Details

ThreatQuotient provides the following details for this integration:

| Current Integration Version | 1.0.0 |
|---|---|
| Compatible with ThreatQ Versions | >= 5.6.0 |
| Support Tier | ThreatQ Supported |

Introduction

The Atom Feed Reader CDF enables analysts to automatically ingest Atom feeds from multiple sources directly into ThreatQ.

The integration provides the following feed:

- Atom Feed Reader - ingests Atom feeds from multiple sources into the ThreatQ platform.

The integration ingests the following system objects:

- Reports

- Indicators

Installation

Perform the following steps to install the integration:

The same steps can be used to upgrade the integration to a new version.

- Log into https://marketplace.threatq.com/.

- Locate and download the integration yaml file.

- Navigate to the integrations management page on your ThreatQ instance.

- Click on the Add New Integration button.

- Upload the integration yaml file using one of the following methods:
  - Drag and drop the file into the dialog box
  - Select Click to Browse to locate the file on your local machine

ThreatQ will inform you if the feed already exists on the platform and will require user confirmation before proceeding. ThreatQ will also inform you if the new version of the feed contains changes to the user configuration. The new user configurations will overwrite the existing ones for the feed and will require user confirmation before proceeding.

- The feed will be added to the integrations page. You will still need to configure and then enable the feed.

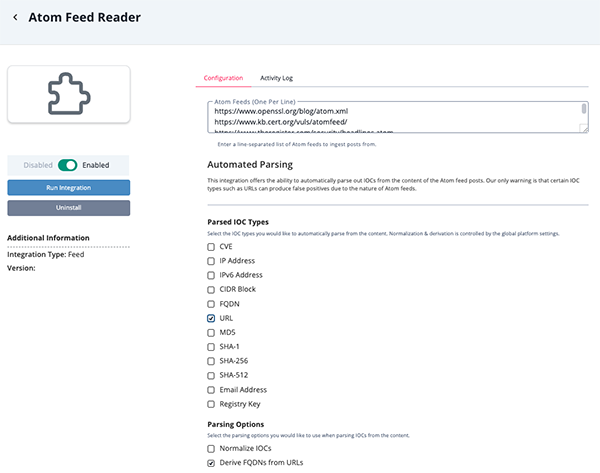

Configuration

ThreatQuotient does not issue API keys for third-party vendors. Contact the specific vendor to obtain API keys and other integration-related credentials.

To configure the integration:

- Navigate to your integrations management page in ThreatQ.

- Select the OSINT option from the Category dropdown (optional).

If you are installing the integration for the first time, it will be located under the Disabled tab.

- Click on the integration entry to open its details page.

- Enter the following parameters under the Configuration tab:

| Parameter | Description |
|---|---|
| Atom Feeds | Enter a line-separated list of Atom feeds to ingest data from. |
| Parsed IOC Types | Select the IOC types to automatically parse from the content. Options include: CVE, IP Address, IPv6 Address, CIDR Block, FQDN, URL, MD5, SHA-1, SHA-256, SHA-512, Email Address, and Registry Key. |
| Parsing Options | Select the parsing options to use when parsing IOCs from content. Options include: Normalize IOCs and Derive FQDNs from URLs. |

- Review any additional settings, make any changes if needed, and click on Save.

- Click on the toggle switch, located above the Additional Information section, to enable it.
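The Atom Feeds, Parsed IOC Types, and Parsing Options parameters can be illustrated with a short Python sketch. This is not the integration's actual parser: the function names (`parse_feed_list`, `extract_iocs`), the deliberately simplified regular expressions, and the way Normalize IOCs is approximated (upper-casing and de-duplicating CVE IDs) are all assumptions made for illustration, and only the CVE, URL, and derived FQDN types are covered.

```python
import re
from urllib.parse import urlparse

def parse_feed_list(raw: str) -> list[str]:
    """Hypothetical helper: split the line-separated Atom Feeds value into URLs."""
    feeds = []
    for line in raw.splitlines():
        url = line.strip()
        # Skip blank lines and anything that is not an http(s) URL.
        if url and urlparse(url).scheme in ("http", "https"):
            feeds.append(url)
    return feeds

# Simplified patterns (assumptions), far looser than a production IOC parser.
CVE_RE = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)
URL_RE = re.compile(r"\bhttps?://[^\s<>\"']+", re.IGNORECASE)

def extract_iocs(text: str, derive_fqdns: bool = True) -> dict[str, list[str]]:
    """Hypothetical IOC extraction for CVE and URL values, plus derived FQDNs."""
    # Approximation of "Normalize IOCs": upper-case CVE IDs and drop duplicates.
    cves = sorted({m.upper() for m in CVE_RE.findall(text)})
    urls = sorted(set(URL_RE.findall(text)))
    result = {"CVE": cves, "URL": urls}
    if derive_fqdns:
        # Approximation of "Derive FQDNs from URLs": keep each URL's hostname.
        hosts = {urlparse(u).hostname for u in urls}
        result["FQDN"] = sorted(h for h in hosts if h)
    return result
```

Passing `derive_fqdns=False` mirrors leaving the Derive FQDNs from URLs option unselected, in which case no FQDN key is produced.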

ThreatQ Mapping

Atom Feed Reader

The Atom Feed Reader feed pulls entries from one or more Atom feeds. Entries will be parsed and uploaded to ThreatQ as Reports.

GET https://www.kb.cert.org/vuls/atomfeed/

Sample Response:

<?xml version="1.0" encoding="utf-8"?>

<feed xml:lang="en-us" xmlns="http://www.w3.org/2005/Atom"><title>CERT Recently Published Vulnerability Notes</title><link href="https://kb.cert.org/vuls/" rel="alternate"/><link href="https://kb.cert.org/vuls/atomfeed/" rel="self"/><id>https://kb.cert.org/vuls/</id><updated>2024-04-18T18:47:30.440271+00:00</updated><author><name>CERT</name><email>cert@cert.org</email><uri>https://www.sei.cmu.edu</uri></author><subtitle>CERT publishes vulnerability advisories called "Vulnerability Notes." Vulnerability Notes include summaries, technical details, remediation information, and lists of affected vendors. Many vulnerability notes are the result of private coordination and disclosure efforts.</subtitle><entry><title>VU#253266: Keras 2 Lambda Layers Allow Arbitrary Code Injection in TensorFlow Models</title><link href="https://kb.cert.org/vuls/id/253266" rel="alternate"/><published>2024-04-16T20:16:27.223993+00:00</published><updated>2024-04-18T18:47:30.440271+00:00</updated><id>https://kb.cert.org/vuls/id/253266</id><summary type="html">

<h3 id="overview">Overview</h3>

<p>Lambda Layers in third party TensorFlow-based Keras models allow attackers to inject arbitrary code into versions built prior to Keras 2.13 that may then unsafely run with the same permissions as the running application. For example, an attacker could use this feature to trojanize a popular model, save it, and redistribute it, tainting the supply chain of dependent AI/ML applications. </p>

<h3 id="description">Description</h3>

<p>TensorFlow is a widely-used open-source software library for building machine learning and artificial intelligence applications. The Keras framework, implemented in Python, is a high-level interface to TensorFlow that provides a wide variety of features for the design, training, validation and packaging of ML models. Keras provides an API for building neural networks from building blocks called Layers. One such Layer type is a Lambda layer that allows a developer to add arbitrary Python code to a model in the form of a lambda function (an anonymous, unnamed function). Using the <code>Model.save()</code> or <code>save_model()</code> <a href="https://keras.io/api/models/model_saving_apis/model_saving_and_loading/#save_model-function">method</a>, a developer can then save a model that includes this code.</p>

<p>The Keras 2 documentation for the <code>Model.load_model()</code> <a href="https://keras.io/2.16/api/models/model_saving_apis/model_saving_and_loading/#load_model-function">method</a> describes a mechanism for disallowing the loading of a native version 3 Keras model (<code>.keras</code> file) that includes a Lambda layer when setting <code>safe_mode</code> (<a href="https://keras.io/api/models/model_saving_apis/model_saving_and_loading/#loadmodel-function">documentation</a>):</p>

<blockquote>

<p>safe_mode: Boolean, whether to disallow unsafe lambda deserialization. When safe_mode=False, loading an object has the potential to trigger arbitrary code execution. This argument is only applicable to the TF-Keras v3 model format. Defaults to True.</p>

</blockquote>

<p>This is the behavior of version 2.13 and later of the Keras API: an exception will be raised in a program that attempts to load a model with Lambda layers stored in version 3 of the format. This check, however, does not exist in the prior versions of the API. Nor is the check performed on models that have been stored using earlier versions of the Keras serialization format (i.e., v2 SavedModel, legacy H5).</p>

<p>This means systems incorporating older versions of the Keras code base prior to versions 2.13 may be susceptible to running arbitrary code when loading older versions of Tensorflow-based models.</p>

<h4 id="similarity-to-other-frameworks-with-code-injection-vulnerabilities">Similarity to other frameworks with code injection vulnerabilities</h4>

<p>The code injection vulnerability in the Keras 2 API is an example of a common security weakness in systems that provide a mechanism for packaging data together with code. For example, the security issues associated with the Pickle mechanism in the standard Python library are well documented, and arise because the Pickle format includes a mechanism for serializing code inline with its data. </p>

<h4 id="explicit-versus-implicit-security-policy">Explicit versus implicit security policy</h4>

<p>The TensorFlow security documentation at <a href="https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md)">https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md)</a> includes a specific warning about the fact that models are not just data, and makes a statement about the expectations of developers in the TensorFlow development community:</p>

<blockquote>

<p><strong>Since models are practically programs that TensorFlow executes, using untrusted models or graphs is equivalent to running untrusted code.</strong> (emphasis in <a href="https://github.com/tensorflow/tensorflow/blob/2fe6b745ea90276b17b28f30d076bc9447918fd7/SECURITY.md">earlier version</a>) </p>

</blockquote>

<p>The implications of that statement are not necessarily widely understood by all developers of TensorFlow-based systems.The last few years has seen rapid growth in the community of developers building AI/ML-based systems, and publishing pretrained models through community hubs like huggingface (<a href="https://huggingface.co/">https://huggingface.co/</a>) and kaggle (<a href="https://www.kaggle.com">https://www.kaggle.com</a>). It is not clear that all members of this new community understand the potential risk posed by a third-party model, and may (incorrectly) trust that a model loaded using a trusted library should only execute code that is included in that library. Moreover, a user may also assume that a pretrained model, once loaded, will only execute included code whose purpose is to compute a prediction and not exhibit any side effects outside of those required for those calculations (e.g., that a model will not include code to communicate with a network). </p>

<p>To the degree possible, AI/ML framework developers and model distributors should strive to align the explicit security policy and the corresponding implementation to be consistent with the implicit security policy implied by these assumptions.</p>

<h3 id="impact">Impact</h3>

<p>Loading third-party models built using Keras could result in arbitrary untrusted code running at the privilege level of the ML application environment.</p>

<h3 id="solution">Solution</h3>

<p>Upgrade to Keras 2.13 or later. When loading models, ensure the <code>safe_mode</code> parameter is not set to <code>False</code> (per <a href="https://keras.io/api/models/model_saving_apis/model_saving_and_loading">https://keras.io/api/models/model_saving_apis/model_saving_and_loading</a>, it is <code>True</code> by default). Note: An upgrade of Keras may require dependencies upgrade, learn more at https://keras.io/getting_started/</p>

<p>If running pre-2.13 applications in a <a href="https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md">sandbox</a>, ensure no assets of value are in scope of the running application to minimize potential for data exfiltration.</p>

<h4 id="advice-for-model-users">Advice for Model Users</h4>

<p>Model users should only use models developed and distributed by trusted sources, and should always verify the behavior of models before deployment. They should follow the same development and deployment best practices to applications that integrate ML models as they would to any application incorporating any third party component. Developers should upgrade to the latest versions of the Keras package practical (v2.13+ or v3.0+), and use version 3 of the Keras serialization format to both load third-party models and save any subsequent modifications.</p>

<h4 id="advice-for-model-aggregators">Advice for Model Aggregators</h4>

<p>Model aggregators should distribute models based on the latest, safe model formats when possible, and should incorporate scanning and introspection features to identify models that include unsafe-to-deserialize features and either to prevent them from being uploaded, or flag them so that model users can perform additional due diligence. </p>

<h4 id="advice-for-model-creators">Advice for Model Creators</h4>

<p>Model creators should upgrade to the latest versions of the Keras package (v2.13+ or v3.0+). They should avoid the use of unsafe-to-deserialize features in order to avoid the inadvertent introduction of security vulnerabilities, and to encourage the adoption of standards that are less susceptible to exploitation by malicious actors. Model creators should save models using the latest version of formats (Keras v3 in the case of the Keras package), and, when possible, give preference to formats that disallow the serialization of models that include arbitrary code (i.e., code that the user has not explicitly imported into the environment). Model developers should re-use third-party base models with care, only building on models from trusted sources.</p>

<h4 id="general-advice-for-framework-developers">General Advice for Framework Developers</h4>

<p>AI/ML-framework developers should avoid the use of naïve language-native serialization facilities (e.g., the Python <code>pickle</code> package has well-established security weaknesses, and should not be used in sensitive applications).</p>

<p>In cases where it's desirable to include a mechanism for embedding code, restrict the code that can be executed by, for example: </p>

<ul>

<li>disallow certain language features (e.g., <code>exec</code>)</li>

<li>explicitly allow only a "safe" language subset</li>

<li>provide a sandboxing mechanism (e.g., to prevent network access) to minimize potential threats.</li>

</ul>

<h3 id="acknowledgements">Acknowledgements</h3>

<p>This document was written by Jeffrey Havrilla, Allen Householder, Andrew Kompanek, and Ben Koo.</p>

</summary><content type="html">

<div class="row" id="content">

<div class="large-9 medium-9 columns">

<div class="blog-post">

<div class="row">

<div class="large-12 columns">

<h3 id="overview">Overview</h3>

<p>Lambda Layers in third party TensorFlow-based Keras models allow attackers to inject arbitrary code into versions built prior to Keras 2.13 that may then unsafely run with the same permissions as the running application. For example, an attacker could use this feature to trojanize a popular model, save it, and redistribute it, tainting the supply chain of dependent AI/ML applications. </p>

<h3 id="description">Description</h3>

<p>TensorFlow is a widely-used open-source software library for building machine learning and artificial intelligence applications. The Keras framework, implemented in Python, is a high-level interface to TensorFlow that provides a wide variety of features for the design, training, validation and packaging of ML models. Keras provides an API for building neural networks from building blocks called Layers. One such Layer type is a Lambda layer that allows a developer to add arbitrary Python code to a model in the form of a lambda function (an anonymous, unnamed function). Using the <code>Model.save()</code> or <code>save_model()</code> <a href="https://keras.io/api/models/model_saving_apis/model_saving_and_loading/#save_model-function">method</a>, a developer can then save a model that includes this code.</p>

<p>The Keras 2 documentation for the <code>Model.load_model()</code> <a href="https://keras.io/2.16/api/models/model_saving_apis/model_saving_and_loading/#load_model-function">method</a> describes a mechanism for disallowing the loading of a native version 3 Keras model (<code>.keras</code> file) that includes a Lambda layer when setting <code>safe_mode</code> (<a href="https://keras.io/api/models/model_saving_apis/model_saving_and_loading/#loadmodel-function">documentation</a>):</p>

<blockquote>

<p>safe_mode: Boolean, whether to disallow unsafe lambda deserialization. When safe_mode=False, loading an object has the potential to trigger arbitrary code execution. This argument is only applicable to the TF-Keras v3 model format. Defaults to True.</p>

</blockquote>

<p>This is the behavior of version 2.13 and later of the Keras API: an exception will be raised in a program that attempts to load a model with Lambda layers stored in version 3 of the format. This check, however, does not exist in the prior versions of the API. Nor is the check performed on models that have been stored using earlier versions of the Keras serialization format (i.e., v2 SavedModel, legacy H5).</p>

<p>This means systems incorporating older versions of the Keras code base prior to versions 2.13 may be susceptible to running arbitrary code when loading older versions of Tensorflow-based models.</p>

<h4 id="similarity-to-other-frameworks-with-code-injection-vulnerabilities">Similarity to other frameworks with code injection vulnerabilities</h4>

<p>The code injection vulnerability in the Keras 2 API is an example of a common security weakness in systems that provide a mechanism for packaging data together with code. For example, the security issues associated with the Pickle mechanism in the standard Python library are well documented, and arise because the Pickle format includes a mechanism for serializing code inline with its data. </p>

<h4 id="explicit-versus-implicit-security-policy">Explicit versus implicit security policy</h4>

<p>The TensorFlow security documentation at <a href="https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md)">https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md)</a> includes a specific warning about the fact that models are not just data, and makes a statement about the expectations of developers in the TensorFlow development community:</p>

<blockquote>

<p><strong>Since models are practically programs that TensorFlow executes, using untrusted models or graphs is equivalent to running untrusted code.</strong> (emphasis in <a href="https://github.com/tensorflow/tensorflow/blob/2fe6b745ea90276b17b28f30d076bc9447918fd7/SECURITY.md">earlier version</a>) </p>

</blockquote>

<p>The implications of that statement are not necessarily widely understood by all developers of TensorFlow-based systems.The last few years has seen rapid growth in the community of developers building AI/ML-based systems, and publishing pretrained models through community hubs like huggingface (<a href="https://huggingface.co/">https://huggingface.co/</a>) and kaggle (<a href="https://www.kaggle.com">https://www.kaggle.com</a>). It is not clear that all members of this new community understand the potential risk posed by a third-party model, and may (incorrectly) trust that a model loaded using a trusted library should only execute code that is included in that library. Moreover, a user may also assume that a pretrained model, once loaded, will only execute included code whose purpose is to compute a prediction and not exhibit any side effects outside of those required for those calculations (e.g., that a model will not include code to communicate with a network). </p>

<p>To the degree possible, AI/ML framework developers and model distributors should strive to align the explicit security policy and the corresponding implementation to be consistent with the implicit security policy implied by these assumptions.</p>

<h3 id="impact">Impact</h3>

<p>Loading third-party models built using Keras could result in arbitrary untrusted code running at the privilege level of the ML application environment.</p>

<h3 id="solution">Solution</h3>

<p>Upgrade to Keras 2.13 or later. When loading models, ensure the <code>safe_mode</code> parameter is not set to <code>False</code> (per <a href="https://keras.io/api/models/model_saving_apis/model_saving_and_loading">https://keras.io/api/models/model_saving_apis/model_saving_and_loading</a>, it is <code>True</code> by default). Note: An upgrade of Keras may require dependencies upgrade, learn more at https://keras.io/getting_started/</p>

<p>If running pre-2.13 applications in a <a href="https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md">sandbox</a>, ensure no assets of value are in scope of the running application to minimize potential for data exfiltration.</p>

<h4 id="advice-for-model-users">Advice for Model Users</h4>

<p>Model users should only use models developed and distributed by trusted sources, and should always verify the behavior of models before deployment. They should follow the same development and deployment best practices to applications that integrate ML models as they would to any application incorporating any third party component. Developers should upgrade to the latest versions of the Keras package practical (v2.13+ or v3.0+), and use version 3 of the Keras serialization format to both load third-party models and save any subsequent modifications.</p>

<h4 id="advice-for-model-aggregators">Advice for Model Aggregators</h4>

<p>Model aggregators should distribute models based on the latest, safe model formats when possible, and should incorporate scanning and introspection features to identify models that include unsafe-to-deserialize features and either to prevent them from being uploaded, or flag them so that model users can perform additional due diligence. </p>

<h4 id="advice-for-model-creators">Advice for Model Creators</h4>

<p>Model creators should upgrade to the latest versions of the Keras package (v2.13+ or v3.0+). They should avoid the use of unsafe-to-deserialize features in order to avoid the inadvertent introduction of security vulnerabilities, and to encourage the adoption of standards that are less susceptible to exploitation by malicious actors. Model creators should save models using the latest version of formats (Keras v3 in the case of the Keras package), and, when possible, give preference to formats that disallow the serialization of models that include arbitrary code (i.e., code that the user has not explicitly imported into the environment). Model developers should re-use third-party base models with care, only building on models from trusted sources.</p>

<h4 id="general-advice-for-framework-developers">General Advice for Framework Developers</h4>

<p>AI/ML-framework developers should avoid the use of naïve language-native serialization facilities (e.g., the Python <code>pickle</code> package has well-established security weaknesses, and should not be used in sensitive applications).</p>

<p>In cases where it's desirable to include a mechanism for embedding code, restrict the code that can be executed by, for example: </p>

<ul>

<li>disallow certain language features (e.g., <code>exec</code>)</li>

<li>explicitly allow only a "safe" language subset</li>

<li>provide a sandboxing mechanism (e.g., to prevent network access) to minimize potential threats.</li>

</ul>

<h3 id="acknowledgements">Acknowledgements</h3>

<p>This document was written by Jeffrey Havrilla, Allen Householder, Andrew Kompanek, and Ben Koo.</p>

</div>

</div>

<div class="row">

<div class="large-12 columns">

<h3> Vendor Information </h3>

<div id="vendorinfo">

One or more vendors are listed for this advisory. Please reference the full report for more information.

</div>

</div>

</div>

<br/>

<div class="row">

<div class="large-12 columns">

<h3> References </h3>

<ul>

<li><a href="https://keras.io/api/models/model_saving_apis/model_saving_and_loading/#loadmodel-function" class="vulreflink safereflink" target="_blank" rel="noopener">https://keras.io/api/models/model_saving_apis/model_saving_and_loading/#loadmodel-function</a></li>

<li><a href="https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md" class="vulreflink safereflink" target="_blank" rel="noopener">https://github.com/tensorflow/tensorflow/blob/master/SECURITY.md</a></li>

<li><a href="https://github.com/Azure/counterfit/wiki/Abusing-ML-model-file-formats-to-create-malware-on-AI-systems:-A-proof-of-concept" class="vulreflink safereflink" target="_blank" rel="noopener">https://github.com/Azure/counterfit/wiki/Abusing-ML-model-file-formats-to-create-malware-on-AI-systems:-A-proof-of-concept</a></li>

<li><a href="https://splint.gitbook.io/cyberblog/security-research/tensorflow-remote-code-execution-with-malicious-model" class="vulreflink safereflink" target="_blank" rel="noopener">https://splint.gitbook.io/cyberblog/security-research/tensorflow-remote-code-execution-with-malicious-model</a></li>

<li><a href="https://5stars217.github.io/2023-03-30-on-malicious-models/" class="vulreflink safereflink" target="_blank" rel="noopener">https://5stars217.github.io/2023-03-30-on-malicious-models/</a></li>

<li><a href="https://hiddenlayer.com/research/models-are-code/" class="vulreflink safereflink" target="_blank" rel="noopener">https://hiddenlayer.com/research/models-are-code/</a></li>

</ul>

</div>

</div>

<h3>Other Information</h3>

<div class="vulcontent">

<table class="unstriped">

<tbody>

<tr>

<td width="200"><b>CVE IDs:</b></td>

<td>

<a href="http://web.nvd.nist.gov/view/vuln/detail?vulnId=2024-3660">CVE-2024-3660 </a>

</td>

</tr>

<tr>

<td>

<b>Date Public:</b>

</td>

<td>2024-02-23</td>

</tr>

<tr>

<td><b>Date First Published:</b></td>

<td id="datefirstpublished">2024-04-16</td>

</tr>

<tr>

<td><b>Date Last Updated: </b></td>

<td>2024-04-18 18:47 UTC</td>

</tr>

<tr>

<td><b>Document Revision: </b></td>

<td>4 </td>

</tr>

</tbody>

</table>

</div>

</div>

</div>

<div class="large-3 medium-3 columns" data-sticky-container>

<div class="sticky" data-sticky data-anchor="content">

<div class="sidebar-links">

<ul class="menu vertical">

<li><a href="https://vuls.cert.org/confluence/display/VIN/Vulnerability+Note+Help" target="_blank" rel="noopener">About vulnerability notes</a></li>

<li><a href="mailto:cert@cert.org?Subject=VU%23253266 Feedback">Contact us about this vulnerability</a></li>

<li><a href="https://vuls.cert.org/confluence/display/VIN/Case+Handling#CaseHandling-Givingavendorstatusandstatement" target="_blank" >Provide a vendor statement</a></li>

</ul>

</div>

</div>

</div>

</div>

</content></entry><entry><title>VU#123335: Multiple programming languages fail to escape arguments properly in Microsoft Windows</title><link href="https://kb.cert.org/vuls/id/123335" rel="alternate"/><published>2024-04-10T15:13:48.856313+00:00</published><updated>2024-04-18T13:33:28.597485+00:00</updated><id>https://kb.cert.org/vuls/id/123335</id><summary type="html">

<h2 id="overview">Overview</h2>

<p>Various programming languages lack proper validation mechanisms for commands and in some cases also fail to escape arguments correctly when invoking commands within a Microsoft Windows environment. The command injection vulnerability in these programming languages, when running on Windows, allows attackers to execute arbitrary code disguised as arguments to the command. This vulnerability may also affect the application that executes commands without specifying the file extension.</p>

<h2 id="description">Description</h2>

<p>Programming languages typically provide a way to execute commands (for e.g., os/exec in Golang) on the operating system to facilitate interaction with the OS. Typically, the programming languages also allow for passing <code>arguments</code> which are considered data (or variables) for the command to be executed. The arguments themselves are expected to be not executable and the command is expected to be executed along with properly escaped arguments, as inputs to the command. Microsoft Windows typically processes these commands using a <code>CreateProcess</code> function that spawns a <code>cmd.exe</code> for execution of the command. Microsoft Windows has documented some of the concerns related to how these should be properly escaped before execution as early as 2011. See <a href="https://learn.microsoft.com/en-us/archive/blogs/twistylittlepassagesallalike/everyone-quotes-command-line-arguments-the-wrong-way">https://learn.microsoft.com/en-us/archive/blogs/twistylittlepassagesallalike/everyone-quotes-command-line-arguments-the-wrong-way</a>. </p>

<p>A vulnerability was discovered in the way multiple programming languages fail to properly escape the arguments in a Microsoft Windows command execution environment. This can lead confusion at execution time where an expected argument for a command could be executed as another command itself. An attacker with knowledge of the programming language can carefully craft inputs that will be processed by the compiled program as commands. This unexpected behavior is due to lack of neutralization of arguments by the programming language (or its command execution module) that initiates a Windows execution environment. The researcher has found multiple programming languages, and their command execution modules fail to perform such sanitization and/or validation before processing these in their runtime environment. </p>

<h2 id="impact">Impact</h2>

<p>Successful exploitation of this vulnerability permits an attacker to execute arbitrary commands. The complete impact of this vulnerability depends on the implementation that uses a vulnerable programming language or such a vulnerable module.</p>

<h2 id="solution">Solution</h2>

<h4 id="updating-the-runtime-environment">Updating the runtime environment</h4>

<p>Please visit the Vendor Information section so see if your programming language Vendor has released the patch for this vulnerability and update the runtime environment that can prevent abuse of this vulnerability. </p>

<h4 id="update-the-programs-and-escape-manually">Update the programs and escape manually</h4>

<p>If the runtime of your application doesn't provide a patch for this vulnerability and you want to execute batch files with user-controlled arguments, you will need to perform the escaping and neutralization of the data to prevent any intended command execution. </p>

<p>Security researcher has more detailed information in the <a href="https://flatt.tech/research/posts/batbadbut-you-cant-securely-execute-commands-on-windows/">blog post</a> which provides details on specific languages that were identified and their Status. </p>

<h2 id="acknowledgements">Acknowledgements</h2>

<p>Thanks to the reporter, <a href="https://flatt.tech/research/posts/batbadbut-you-cant-securely-execute-commands-on-windows/">RyotaK</a>.This document was written by Timur Snoke.</p>

</summary><content type="html">

ThreatQuotient provides the following default mapping for this feed:

| Feed Data Path | ThreatQ Entity | ThreatQ Object Type or Attribute Key | Published Date | Examples | Notes |
|---|---|---|---|---|---|
| .entry[].title, .entry[].title['#text'] | Report.Value | N/A | .updated | [Bug 15103] CVE-2022-1615 [SECURITY] GnuTLS gnutls_rnd() can fail and give predictable random values | N/A |
| .entry[].content, .entry[].content['#text'] | Report.Description | N/A | N/A | <HTML> | N/A |
| .entry[].summary, .entry[].summary['#text'] | Report.Description | N/A | N/A | <HTML> | N/A |
| .entry[].author.name | Report.Attribute | Author | .updated | OpenSSL Foundation, Inc. | N/A |
| .entry[].author.name | Report.Source | N/A | N/A | OpenSSL Foundation, Inc. | N/A |
| N/A | Report.Source | N/A | N/A | Atom Feed Reader | Source applied by default |
| .entry[].link['#href'] | Report.Attribute | External Reference | .updated | N/A | N/A |
| .entry[].id | Report.Attribute | ID | .updated | N/A | Only ingested if different from the link['#href'] field. |
| <Entry Title/Content> | Report.Indicator | CVE | .updated | N/A | Entries are parsed for CVE IDs. |
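The default mapping can be approximated with the Python standard library's `xml.etree.ElementTree`. This is a hypothetical sketch rather than the CDF's implementation: the `entry_to_report` and `parse_feed` names, the dictionary layout, and the choice to prefer content over summary when both are present are assumptions. It does follow two rules the mapping states explicitly: the Atom Feed Reader source is always applied, and the ID attribute is kept only when it differs from the link href.

```python
import re
import xml.etree.ElementTree as ET

ATOM = {"a": "http://www.w3.org/2005/Atom"}
CVE_RE = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)

def entry_to_report(entry: ET.Element) -> dict:
    """Hypothetical mapping of one Atom <entry> to a report-like dict."""
    title = entry.findtext("a:title", default="", namespaces=ATOM).strip()
    # Assumption: use <content> when it has text, otherwise fall back to <summary>.
    description = (entry.findtext("a:content", namespaces=ATOM)
                   or entry.findtext("a:summary", default="", namespaces=ATOM))
    updated = entry.findtext("a:updated", namespaces=ATOM)
    link_el = entry.find("a:link", ATOM)
    href = link_el.get("href") if link_el is not None else None
    entry_id = entry.findtext("a:id", namespaces=ATOM)

    attributes = []
    sources = ["Atom Feed Reader"]  # source applied by default
    author = entry.findtext("a:author/a:name", namespaces=ATOM)
    if author:
        attributes.append(("Author", author))
        sources.append(author)
    if href:
        attributes.append(("External Reference", href))
    if entry_id and entry_id != href:
        # ID is only recorded when it differs from the link href.
        attributes.append(("ID", entry_id))

    # Title and description are scanned for CVE IDs.
    cves = sorted({m.upper() for m in CVE_RE.findall(f"{title} {description}")})
    return {"value": title, "description": description, "published_at": updated,
            "attributes": attributes, "sources": sources, "cves": cves}

def parse_feed(xml_text: str) -> list[dict]:
    """Parse an Atom document and map every <entry> it contains."""
    root = ET.fromstring(xml_text)
    return [entry_to_report(e) for e in root.findall("a:entry", ATOM)]
```

For the CERT sample shown earlier, the entry id equals the link href, so under this sketch no separate ID attribute would be recorded.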

Average Feed Run

Object counts and Feed runtime are supplied as generalities only. Objects returned by a provider can differ based on credential configurations, and Feed runtime may vary based on system resources and load.

| Metric | Result |
|---|---|
| Run Time | 1 minute |
| Reports | 90 |
| Report Attributes | 200 |
| Indicators | 69 |

Known Issues / Limitations

- The feed uses since and until dates to make sure entries are not re-ingested unless they have been updated. To ingest historical entries, run the feed manually with the since date set further back.
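The since/until behavior can be sketched as a timestamp window check on each entry's updated value. The half-open [since, until) boundary, the function names, the treatment of offset-less timestamps as UTC, and the dictionary shape of an entry are all assumptions; the sketch only illustrates why moving the since date back makes older entries eligible again.

```python
from datetime import datetime, timezone

def parse_updated(value: str) -> datetime:
    """Parse an Atom <updated> timestamp into a timezone-aware datetime."""
    # Python < 3.11 fromisoformat() rejects a trailing "Z", so rewrite it first.
    ts = datetime.fromisoformat(value.replace("Z", "+00:00"))
    # Assumption: a timestamp without an offset is treated as UTC.
    return ts if ts.tzinfo is not None else ts.replace(tzinfo=timezone.utc)

def filter_entries(entries: list[dict], since: datetime, until: datetime) -> list[dict]:
    """Keep entries whose updated value falls in the [since, until) window.

    `since` and `until` must be timezone-aware, or the comparison raises TypeError.
    """
    return [e for e in entries if since <= parse_updated(e["updated"]) < until]
```

With since set to 2024-04-17 and until to 2024-04-19 (UTC), an entry updated on 2024-01-01 is skipped; moving since back to 2023-12-31 makes it eligible again, which matches the manual-run workaround.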

Change Log

- Version 1.0.0
  - Initial release

PDF Guides

| Document | ThreatQ Version |
|---|---|
| Atom Feed Reader CDF Guide v1.0.0 | 5.6.0 or Greater |