ThreatQ Pynoceros documentation¶

Configuration Driven Feeds¶

The Pynoceros codebase is responsible for handling feed execution and parsing. Configuration Driven Feeds (CDFs) allow a user to build powerful and robust definitions of how to ingest Threat Intelligence from a Feed provider. This section provides a general overview of writing CDF definitions and documents the options available when doing so.

Feed Runs¶

This section provides an overview of different Feed Run types that are available to users running Configuration Driven Feeds.

All Feed Runs have an associated time range to query. The start and end datetime values for the Feed Run’s query range are known as the since and until dates within CDFs. These values are available for use within a definition via Run Meta.

Scheduled Runs¶

Scheduled Runs are a Feed’s primary means of ingesting data and are scheduled and executed via the dynamo process.

The query time range for Scheduled Runs is based on a Feed’s last_import_at and configured period (aka frequency), or schedule. The schedule is a limited CRON string that can be used for advanced scheduling.

The first time a Feed runs, its time range is set as:

since - The current time minus the Feed’s period or schedule interval.

until - The current time.

When a Scheduled Run completes successfully, the Feed’s last_import_at is updated to the completed Run’s until datetime. The next Scheduled Run is then scheduled for the previous run’s until time plus the Feed’s period, or schedule interval.

From then on, Scheduled Run query ranges are set as:

since - The Feed’s last_import_at.

until - The Feed’s last_import_at plus the Feed’s period, or schedule interval.

If a Scheduled Run encounters an error or fails for some reason, the Feed’s last_import_at is not updated. If a Feed’s last_import_at falls behind due to large data throughput, Feed Run errors, the Feed being disabled, or any other reason, the Feed will perform consecutive Scheduled Runs until it catches back up to the current time.

For any given Feed, only one Scheduled Run may be in progress at a time.
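The query range rules for Scheduled Runs can be sketched in Python. This is a minimal illustration under stated assumptions, not Dynamo's actual implementation: the function name next_run_window is hypothetical, the period is given in seconds, and CRON schedules are not modeled.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple


def next_run_window(
    now: datetime,
    period_seconds: int,
    last_import_at: Optional[datetime] = None,
) -> Tuple[datetime, datetime]:
    """Return the (since, until) query range for the next Scheduled Run.

    Hypothetical helper: models period-based feeds only, not CRON schedules.
    """
    period = timedelta(seconds=period_seconds)
    if last_import_at is None:
        # First run: look back one period from the current time.
        return now - period, now
    # Later runs: continue from where the last successful run ended.
    return last_import_at, last_import_at + period


now = datetime(2020, 2, 19, 12, 0, 0, tzinfo=timezone.utc)

# First run of an hourly feed.
first_since, first_until = next_run_window(now, 3600)
print(first_since.isoformat(), first_until.isoformat())

# A feed that fell three hours behind performs consecutive runs,
# advancing last_import_at to each completed run's until value.
last_import_at = now - timedelta(hours=3)
windows: List[Tuple[datetime, datetime]] = []
while last_import_at < now:
    since, until = next_run_window(now, 3600, last_import_at)
    windows.append((since, until))
    last_import_at = until  # only advanced when the run succeeds
print(len(windows))
```

In this sketch a failed run would simply skip the last_import_at assignment, so the same window would be retried on the next attempt.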

Note

Starting in ThreatQ Version 5.3.0, first run behavior for CRON-scheduled feeds is determined entirely by the schedule. If a schedule is configured as 30 15 * * *, the feed will not run until the 30th minute of the 15th hour, System Time (UTC), regardless of last_import_at.

Note

The database will store BOTH a CRON-Schedule AND the Frequency, but the CRON-Schedule takes precedence: if it is not None for a given feed, the frequency is ignored.

Manual Runs¶

Manual Runs are on-demand Feed Runs triggered via the ThreatQ UI or CLI. Manual Runs require a since time and accept an optional until time. Since Manual Runs require at least a since time, only Feeds that leverage a since or until value within their definition’s source support Manual Runs.

For any given Feed, only one Manual Run may be in progress at a time.

One can trigger a Manual Run for an installed, enabled, and configured Feed by using the following artisan command via the CLI:

sudo php /var/www/api/artisan threatq:feeds-manual --since <DateTime> --until <DateTime> <Feed Name>

# The format for <DateTime> is "YYYY-MM-DD HH:mm:ss" (e.g. "2020-02-19 23:24:00")
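When triggering Manual Runs from a script, the DateTime arguments need to match the format above exactly. The following Python sketch builds the command line from datetime objects; the helper name manual_run_command and the feed name "Example Feed" are hypothetical, and the helper only assembles the string rather than executing it.

```python
import shlex
from datetime import datetime
from typing import Optional

# strftime equivalent of the "YYYY-MM-DD HH:mm:ss" format expected by the command.
DATETIME_FORMAT = "%Y-%m-%d %H:%M:%S"


def manual_run_command(
    feed_name: str, since: datetime, until: Optional[datetime] = None
) -> str:
    """Assemble (but do not run) the artisan command for a Manual Run."""
    args = [
        "sudo", "php", "/var/www/api/artisan", "threatq:feeds-manual",
        "--since", since.strftime(DATETIME_FORMAT),
    ]
    if until is not None:
        # until is optional for Manual Runs.
        args += ["--until", until.strftime(DATETIME_FORMAT)]
    args.append(feed_name)
    # shlex.join quotes arguments containing spaces for safe shell use.
    return shlex.join(args)


print(manual_run_command("Example Feed", datetime(2020, 2, 19, 23, 24, 0)))
```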

Note

For any given Feed, both a Scheduled Run and a Manual Run can be in progress at the same time.

Feed Definitions¶

A CDF definition file is written in the YAML data serialization language. For more YAML information and usage examples, see Basic YAML Usage.

CDF Feed Definitions also make extensive use of the Jinja2 templating language. See Jinja2 Templating in CDF for more information.

For more information on how to write a feed definition and the purpose of each of its parts, see Writing a Feed Definition.

Basic YAML Usage¶

Overview¶

A CDF definition file is written in the YAML data serialization language. YAML is a superset of JSON, supporting all of the possible data forms allowed within standard JSON data and more. This page lays out common YAML constructs one will encounter while writing a Feed Definition.

Note

YAML comments are denoted by a #. Within this section, commented lines in YAML examples provide more contextual information.

Basic YAML Structure¶

YAML utilizes indentation to specify scoping of object data. Indentation must use spaces rather than tabs, and two spaces per level is the common convention.

Primitive Data Types (Scalars)¶

YAML supports all the standard primitive data types one would expect from a data markup language, namely:

String

Integer

Floating Point

Boolean

Null

The following illustrates the use of various primitive data types within YAML:

    Hello There # String
    'Hello There' # String
    "Hello There" # String
    42 # Int
    20.21 # Floating Point
    True # Boolean
    False # Boolean
    null # Null

    |
      This is a
      multiline string
    # Result: "This is a\nmultiline string\n"

    |-
      This is a
      multiline string with
      the trailing newline removed
    # Result: "This is a\nmultiline string with\nthe trailing newline removed"

Warning

In the context of Pynoceros CDF, data does not require explicit quotations to be read as a String. Quotations are required in a few “Gotcha” cases, however:

Strings starting with certain reserved symbols, like % and !

An empty string ('')

Regex strings

Basic Data Structures (Collections)¶

The following data structures can be used anywhere within a YAML file.

List/Array¶

Lists (or arrays) are denoted in YAML via a - prior to a data value. When supplying elements to a list via the - syntax, element declarations should be indented once (two spaces only) beneath the list object declaration. Like JSON, YAML lists can contain a mix of multiple data types. The following illustrates the use of a YAML list called example_list that has two elements:

    example_list:
      - Hello There
      - 42

Dictionary/Mapping¶

A dictionary (or mapping) is a collection of key/value pairs. A Key is expected to be a String (Integers are implicitly cast to a String), while a Value can be any supported YAML value: a scalar (primitive data value) or collection (list or dictionary). The following illustrates a complex YAML dictionary called example_dict that uses a variety of YAML types:

    example_dict:
      id: 99
      may_be_provided: null
      description: This is an example!
      values:
        - 1
        - 2
        - 3
        - color: Blue
        - nested_dict:
            is_nested: True

Warning

Sometimes YAML needs a little help determining what data structure you are intending to create, particularly when intermixing dictionaries and lists. Note that the nested_dict mapping under values has its is_nested attribute indented twice (four spaces instead of the usual two).

The previous example is directly equivalent to the following JSON:

    {
      "example_dict": {
        "values": [
          1,
          2,
          3,
          {
            "color": "Blue"
          },
          {
            "nested_dict": {
              "is_nested": true
            }
          }
        ],
        "id": 99,
        "may_be_provided": null,
        "description": "This is an example!"
      }
    }

Custom YAML Declarations¶

YAML allows one to declare arbitrary data structures for use anywhere within the YAML file by simple reference. When referenced, the reference is replaced with the content of the custom declaration. The following illustrates creating custom declarations and referencing said declarations within the YAML file:

    _anchors:
      - &custom1 # Here, &custom1 denotes the name of this custom declaration
        test_value_A: 1
        test_value_B: 2
      - &custom2 # Here, &custom2 denotes the name of this custom declaration
        test_value_C: 3

    example:
      is_true: True
      <<: [*custom1, *custom2] # Referencing custom declarations
      is_false: False

The previous example written without the custom declarations would look like:

    example:
      is_true: True
      test_value_A: 1
      test_value_B: 2
      test_value_C: 3
      is_false: False

The final resulting YAML from either example would be equivalent. Utilizing custom YAML declarations allows a Feed Definition writer to reduce repeated logic and create more modular definitions.

Note

Custom YAML declarations cannot be used to extend a list.

Jinja2 Templating in CDF¶

Overview¶

Jinja2 is a powerful templating language written for Python. CDF Definitions provide an interface for manipulating and accessing data via Jinja2 Expressions and Templates.

Leveraging Jinja2 in Feed Definitions¶

By default, CDF Definitions read YAML values as primitive data types. To indicate that a YAML value should be interpreted as a Jinja2 Expression or Template, one must insert a YAML tag before the value.

!expr - Read value as Jinja2 Expression

!tmpl - Read value as Jinja2 Template

The following illustrates these tags in the context of a CDF Filter Chain:

    filters:
      - get: resources
      - iterate
      - new:
          id: !expr value.id if value.id else 'None'
          display: !tmpl 'Value of A is {{value.A}} and Value of B is {{value.B}}'

Jinja2 Expressions¶

Jinja2 Expressions allow one to specify Python-like expressions that act upon data passed into them via keyword arguments. Jinja2 Expressions can be used to implement conditional transformation logic, string concatenation, and numerous other functionalities. The following illustrates some common expression constructs as if they were snippets of a CDF Filter Chain:

    # =========================
    # String Concatenation
    # =========================
    - new: Test!
    - new: !expr '"This is a " ~ value'
    # Result: "This is a Test!"

    # Jinja2 Templates should be preferred over Jinja2 Expressions for simple string concatenation.
    # For example, the previous snippet could be rewritten as:
    - new: Test!
    - new: !tmpl 'This is a {{value}}'

    # However, in use cases in which string concatenation is part of a more complex expression,
    # Jinja2 Expressions must be used. For example:
    - new:
        some_mapping:
          key_abc: 2
          key_def: 42
          key_ghi: 9
        lookup_key: def
    - if:
        condition: !expr '"key_" ~ value.lookup_key in value.some_mapping'
        filters:
          - set:
              lookup_value: !expr 'value.some_mapping["key_" ~ value.lookup_key]'
    - set-default:
        lookup_value: !expr None
    - get: lookup_value
    # Result: 42

    # =========================
    # Conditional Logic
    # =========================
    - new:
        num: 28
    - new: !expr '"Even" if value.num % 2 == 0 else "Odd"'
    # Result: "Even"

    - new:
        num: 27
    - new: !expr '"Even" if value.num % 2 == 0 else "Odd"'
    # Result: "Odd"

    # =========================================
    # Python-like Operations & List Creation
    # =========================================
    - new: !expr '["# This is a comment", 1, 2, 3]'
    - iterate
    - str
    - drop: !expr value.startswith('#')
    - new: !expr value|int ** value|int # Uses the Jinja2 `int` filter
    # Yields: 1, 4, 27

    # Jinja2 Expressions are not required for creating lists. The above, however,
    # looks better than the following:
    - new:
        -
          - '# This is a comment'
          - 1
          - 2
          - 3

    # A list of lists has to be used here since the `new` filter unpacks an iterable into
    # its args. This is not necessary when using a Jinja2 Expression because the Expression
    # itself is a single argument. Regardless, the above can be somewhat beautified without
    # using a Jinja2 Expression by writing out the list using JSON syntax, which is supported
    # given that YAML is a superset of JSON.
    - new:
        - ['# This is a comment', 1, 2, 3]

    # This looks just like the line in the example that uses the Jinja2 Expression, except without
    # the !expr tag and the list isn't wrapped in quotes. For most cases, this is fine, unless
    # there is a list item that is an expression. Then a Jinja2 Expression is required:
    - new: !expr '["# This is a comment" ~ "!", 1 ** 1, 2 ** 2, 3 ** 3]'
    # Result: ["# This is a comment!", 1, 4, 27]

Note

String concatenation within Jinja2 Expressions utilizes the ~ character rather than the classic + symbol.

Note

Jinja2 Expressions in the context of CDF Definitions do not need to be wrapped entirely in quotes - only a specific string chunk used within an Expression needs to be quoted.

Warning

Jinja2 Expressions do not support list comprehensions or looping.

For more information on Jinja2 Expressions including tests and filters built into Jinja2, see Jinja2’s API Documentation.

Jinja2 Templates¶

Jinja2 Templates allow one to easily create formatted strings more concisely than with Jinja2 Expressions. The following illustrates how to utilize Jinja2 Templates to create formatted strings as if they were snippets of a CDF Filter Chain:

    - new:
        A: 1
        B: 2
    - new: !tmpl 'A = {{value.A}} and B = {{value.B}}'
    # Result: "A = 1 and B = 2"

    - new:
        A: True
        B: False
    - new: !tmpl 'A = {{value.A}} and B = {{value.B}}'
    # Result: "A = True and B = False"

    - new:
        A: !expr '"This is a " ~ "Test!"'
        B: !expr 2 ** 2
    - set:
        C: !expr value.A.startswith('This')
    - new: !tmpl 'A = {{value.A}} and B = {{value.B}} and C = {{value.C}}'
    # Result: "A = This is a Test! and B = 4 and C = True"

    # Templates can include inline expressions:
    - new:
        num: 27
    - new: !tmpl '{{value.num}} is {{"Even" if value.num % 2 == 0 else "Odd"}}.'
    # Result: "27 is Odd."

    # Templates can be multiline and use Jinja2 Control Structures and Whitespace Control:
    - new:
        vulnerable_browsers:
          - Firefox
          - Brave
          - Netscape
    - set:
        description: !tmpl |
          {% if value.vulnerable_browsers -%}
          <h2>Vulnerable Browsers</h2><ul>
          {%- for browser in value.vulnerable_browsers -%}
          <li>{{browser}}</li>
          {%- endfor %}</ul>
          {%- endif %}
          {%- if value.vulnerable_vendors %}
          <h2>This Won't Render</h2>
          {% endif %}
    # Result:
    # {
    #   "description": "<h2>Vulnerable Browsers</h2><ul><li>Firefox</li><li>Brave</li><li>Netscape</li></ul>",
    #   "vulnerable_browsers": [
    #     "Firefox",
    #     "Brave",
    #     "Netscape"
    #   ]
    # }

For more information on Jinja2 Templates including tests and filters built into Jinja2, see Jinja2’s API Documentation.

Pynoceros Jinja2 Extension¶

The Pynoceros SDK used by Dynamo’s CDF runtime contains a Jinja2 Extension which exposes the following Jinja2 Filters:

to_timestamp - New in version 4.34.0. This filter passes its input to the arrow module’s get(), returning an Arrow object. The following illustrates some example use cases for this Jinja2 Filter used within Jinja2 Expressions:

    # Subtract 5 minutes (300 seconds) from when the feed run started and display the resultant
    # timestamp in the format `YYYY-MM-DDTHH:MM:SSZ`:
    - new: !expr (((run_meta.since|to_timestamp).format('X')|int - 300)|to_timestamp).strftime('%FT%H:%M:%SZ')
    # Example Result: "2020-11-15T19:36:52Z"

    # Convert a timestamp from one format to another:
    - new:
        published: '05/20/2020 05:20:20 AM'
    - set:
        published: !expr value.published | to_timestamp('M/D/YYYY h:mm:ss A')
    - filter-mapping:
        published: timestamp
        # There is no way to provide an input timestamp format, so to_timestamp is used for that purpose
    # Result: {"published": "2020-05-20 05:20:20-00:00"}

Note

Pynoceros is currently dependent on arrow 0.8.0. As a result, the Arrow object returned from this filter will not have newer convenient methods like shift(). Therefore, doing relative datetime manipulations is a bit more tedious today, as can be seen in one of the above examples.

Warning

Arrow objects are not JSON serializable. If one is using tq-feed run to test a Feed Definition’s Filter Chain and has the following at the end of the Filter Chain:

    - new:
        published: '05/20/2020 05:20:20 AM'
    - set:
        published: !expr value.published | to_timestamp('M/D/YYYY h:mm:ss A')

One will see the error:

    TypeError: <Arrow [2020-05-20T05:20:20+00:00]> is not JSON serializable

To avoid this, one needs to convert the Arrow object into a JSON primitive, similar to what is done in the above use case examples by using the filter-mapping and timestamp filters.

Jinja2 Caveats¶

Jinja2’s dot syntax can only be used to access valid Python identifiers: all characters alphanumeric or underscores, not starting with a number. Keys that do not conform to standard Python identifiers, such as meta-category, can instead be referenced with the direct dictionary access syntax. For instance, the expression !expr value.meta-category would be read by Jinja2 as value.meta - category, rather than selecting on the key meta-category. The correct expression to reference this value is !expr value["meta-category"].

Warning

Some keys (such as ‘items’) are reserved in CDFs and cannot be used with dot notation. It’s recommended to not use these keys at all, but if you must, accessing them as !expr value["items"] will work.

Writing a Feed Definition¶

Overview¶

The basic setup of a CDF Definition is as follows:

    version: '1.1.1'
    required_threatq_version: '>=4.34.0'
    template_values:
      ...
    feeds:
      Feed Name ABC:
        category: Commercial
        default_period: 86400
        display_name: ABC
        namespace: threatq.feeds.FeedNameABC
        description: "This is Feed ABC"
        default_indicator_status: Active
        default_signature_status: Active
        timestamp_format: '%s'
        user_fields:
          ...
        source:
          ...
        filters:
          ...
        report:
          ...
      Feed Name XYZ:
        category: Commercial
        default_period: 3600
        namespace: threatq.feeds.FeedNameXYZ
        default_indicator_status: Review
        timestamp_format: '%Y-%m-%d %H:%M:%S'
        user_fields:
          ...
        source:
          ...
        filters:
          ...
        report:
          ...

This section covers all keys in the root scope of a Feed Definition and most keys in a given feed dictionary mapping under feeds. A feed’s Source (source), Filters (filters), and Reporting (report) keys are much more involved and are covered in their own sections.

Feed Definition Version¶

The version of a Feed Definition is provided via the version key in the root scope of a Feed Definition. All primary feeds in the Feed Definition are versioned based on the version value.

It is highly advised to follow Semantic Versioning.

Warning

Explicitly wrap the version value in quotes so that the YAML parser does not attempt to interpret it as a floating point value.

Feed Definition Requirements¶

Based on changes in the Pynoceros SDK, Dynamo, the ThreatQ API, etc., a Feed Definition may be constrained to specific ThreatQ versions (the aforementioned ThreatQ components are typically all versioned to match the ThreatQ version). Attempting to install a Feed Definition that uses a feature that is not known to any of the aforementioned components may result in failing to install the feed or failing to successfully run the feed.

The required_threatq_version key in the root scope of a Feed Definition provides a means of guarding against installing the Feed Definition on a ThreatQ box that is incapable of understanding or running it. The required_threatq_version value is a version specifier modeled after PEP 440.

Note

A version specifier contains constraints. A constraint is in the format {{operator}}{{version}}, such as >=4.34.0. There can be whitespace between the operator and the version in the constraint. Any leading or trailing whitespace in an individual constraint is trimmed.

The currently supported operators are: ==, !=, <, <=, >=, and >.

A version specifier can have multiple constraints separated by commas that are implicitly joined by ANDs, meaning that the ThreatQ version must satisfy all the constraints. For example, if a Feed Definition can be supported by at least ThreatQ version 4.34.0 but cannot be supported by ThreatQ version 4.44.0 for some reason, one can provide the required_threatq_version value: >=4.34.0, !=4.44.0.

Most Feed Definitions are only constrained by a minimum ThreatQ version; therefore, most version specifiers are in the format: >=x.y.z.

The required_threatq_version is only checked by the ThreatQ API at Feed Definition installation time. It is not checked by Dynamo before loading or running a Feed Definition.
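The way a version specifier is evaluated can be sketched in Python. This is an illustration of the rules described in this section, not the ThreatQ API's actual implementation: the function name satisfies is hypothetical, and versions are assumed to be purely numeric dotted strings with the same number of parts.

```python
import operator
import re
from typing import Tuple

# The supported comparison operators and their Python equivalents.
_OPERATORS = {
    "==": operator.eq,
    "!=": operator.ne,
    "<=": operator.le,
    ">=": operator.ge,
    "<": operator.lt,
    ">": operator.gt,
}
# Two-character operators are listed first so "<=" is not read as "<".
_CONSTRAINT = re.compile(r"^(==|!=|<=|>=|<|>)\s*(\d+(?:\.\d+)*)$")


def _parse_version(text: str) -> Tuple[int, ...]:
    """Turn "4.34.0" into (4, 34, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def satisfies(threatq_version: str, specifier: str) -> bool:
    """Return True if the version meets every comma-separated constraint."""
    current = _parse_version(threatq_version)
    for raw in specifier.split(","):
        # Leading and trailing whitespace in a constraint is trimmed.
        match = _CONSTRAINT.match(raw.strip())
        if match is None:
            raise ValueError(f"invalid constraint: {raw!r}")
        op, version = match.groups()
        if not _OPERATORS[op](current, _parse_version(version)):
            return False  # constraints are joined by AND
    return True


print(satisfies("4.40.1", ">=4.34.0, !=4.44.0"))
print(satisfies("4.44.0", ">=4.34.0, !=4.44.0"))
```

Comparing integer tuples rather than raw strings matters here: as strings, "4.9.0" would sort after "4.34.0".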

Template Values¶

A Feed Definition writer often wants to reference some hard-coded static data in several locations within the Feed Definition or lookup/resolve values in a global reference dictionary mapping. The optional template_values dictionary mapping, located at the root of a Feed Definition, provides an easy mechanism for declaring this information.

The following demonstrates an example template_values declaration:

    template_values:
      common_string: This is used often
      lookup_table:
        keyA: A value to insert
        keyB: Another value

Once declared, these template_values can be accessed anywhere in the Feed Definition via Jinja2 Expressions or Templates. For instance, a Feed Definition writer can reference the above template_values like so:

    filters:
      - new:
          common: !tmpl '{{common_string}} to dance!'
          needle: keyA
      - set:
          found: !expr lookup_table[value.needle]
    # Result:
    # {
    #   "common": "This is used often to dance!",
    #   "found": "A value to insert",
    #   "needle": "keyA"
    # }

Warning

The following key names should not be used as Template Values as they are reserved and are overwritten during parsing:

data (reserved within report)

run_meta (see Feed Run Metadata)

run_params

run_vars

user_fields (see User Fields)

response (see User Fields)

value (reserved within filters)

Note

Jinja2 Expressions or Templates cannot be used within template_values.

Category¶

When looking at the “My Integrations” page on the ThreatQ UI, one will see that Feeds have categories that can be filtered on. The two default categories are:

OSINT (Open-source intelligence)

Commercial

The category key is used to set the category for a feed:

    feeds:
      Example OSINT Feed:
        category: OSINT
        ...
      Example Commercial Feed:
        category: Commercial
        ...

Note

category values are case-sensitive. Feed installation may fail if capitalization is incorrect.

Note

If no category is explicitly set, the feed will default to using the Labs category.

Note

While not explicitly enforced, the STIX/TAXII category should be reserved for TAXII feeds that are dynamically created via the ThreatQ UI (“My Integrations” > “Add New Integrations” > “Add New TAXII Feed”). If one is creating a Feed Definition that utilizes the TAXII Source and/or the parse-stix filter, one should stick to using either the OSINT or Commercial categories.

Default Period¶

When specified, the Default Period value (in seconds) is honored as the Feed’s run period on install. Currently, the ThreatQ UI supports a few frequency options: 3600 (1 Hour), 21600 (6 Hours), 86400 (1 Day), 172800 (2 Days), 1209600 (14 days), and 2592000 (30 Days).
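Because default_period is expressed in seconds, it can help to derive the value rather than type it by hand. The short Python sketch below recomputes the frequency options listed above from readable durations; the UI_PERIODS name is only illustrative.

```python
from datetime import timedelta

# Frequency options offered by the ThreatQ UI, expressed as durations.
UI_PERIODS = {
    "1 Hour": timedelta(hours=1),
    "6 Hours": timedelta(hours=6),
    "1 Day": timedelta(days=1),
    "2 Days": timedelta(days=2),
    "14 Days": timedelta(days=14),
    "30 Days": timedelta(days=30),
}

# Convert each duration to the integer number of seconds default_period expects.
period_seconds = {label: int(delta.total_seconds()) for label, delta in UI_PERIODS.items()}

for label, seconds in period_seconds.items():
    print(f"{label}: default_period: {seconds}")
```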

The default_period key is used to set the default period for a feed:

    feeds:
      Example Feed:
        default_period: 3600

Note

The default value for default_period is 86400, or a daily period.

Note

The default_period is only honored on install. Upgrades will not reset an installed Feed’s period back to the default_period.

Note

While the ThreatQ UI only supports daily and hourly period choices, the feed itself can specify any integer value for default_period and have it honored.

New in version 5.3.0.

Default Schedule¶

When specified, the Default Schedule value (in modified CRON) is honored as the Feed’s run schedule on install. Currently, the ThreatQ UI supports Daily and Weekly configurations.

The default_schedule key is used to set the default schedule for a feed:

    feeds:
      Example Feed:
        default_schedule: "30 13 * * *"

Note

The default value for default_schedule is None.

Note

The default_schedule is only honored on install. Upgrades will not reset an installed Feed’s schedule back to the default_schedule.

Note

While the ThreatQ UI only supports daily and weekly schedule choices, the YAML itself can specify any valid CRON-like schedule and have it honored. The schedule is validated on upload.

Note

The ? and W syntaxes are not supported. The H syntax is supported. For more detailed information about the supported CRON syntax, please reference the helpcenter Docs. Alternatively, the command line utility tq-feed analyze can be used for validation.

New in version 4.42.0.

Display Name¶

A feed’s display_name is the identifier string shown on the presentation layer.

A display_name can be any arbitrary string up to 255 characters. For example:

    feeds:
      PhishLabs:
        feed_type: primary
        namespace: threatq.connector.commercial.phishlabs.lab
        description: "Retrieves data at PhishLabs"
        ...
      Phish Labs Intelligence Echo:
        feed_type: primary
        display_name: PhishLabs
        namespace: threatq.connector.commercial.phishlabs.echo
        description: "Retrieves Echo data at PhishLabs"
        ...
      Phish Labs Intelligence Golf:
        feed_type: primary
        display_name: PhishLabs
        namespace: threatq.connector.commercial.phishlabs.golf
        description: "Retrieves Golf data at PhishLabs"
        ...

Note

The default value for display_name is the feed’s name when the display_name key is not stated.

Note

One can use display_name to update a single integration using multiple sources of data.

New in version 5.8.0.

Namespace¶

A feed’s Namespace is a unique identifier, often used in place of the feed’s human-readable name for identification purposes. A Namespace can be any arbitrary identifier, but it often follows the convention threatq.connector.[category].[vendor].[identifier]. For example:

    feeds:
      Phishlabs:
        category: Commercial
        namespace: threatq.connector.commercial.phishlabs.PhishLabs
        ...
      www.dan.me.uk Tor Node List:
        category: OSINT
        namespace: threatq.connector.osint.www_dan_me_ul_torlist.WwwDanMeUkTorList
        ...
      Bambenek Consulting - C2 IP:
        category: OSINT
        namespace: threatq.connector.osint.bambenek.C2IP
        ...

Note

If no namespace is provided, the feed’s namespace will default to threatq.connector.[feed_name], where feed_name is the unmodified feed display name. Note that this may not follow convention, since feed names may contain spaces (whereas namespaces typically do not).

Description¶

A feed’s Description explains what a feed does. A Description can be any arbitrary string up to 255 characters. For example:

    feeds:
      Phishlabs:
        category: Commercial
        namespace: threatq.connector.commercial.phishlabs.PhishLabs
        description: "Retrieves data at PhishLabs and does stuff."
        ...

Note

If no description is provided, the feed’s description will default to a blank string.

Ingest Rules¶

Ingest Rules is a mapping that contains data ingestion rules for the feed. At the time of writing, the only supported ingest rule is for Attributes.

On feed upload, these configurations are used for setting an ingestion rule in the platform. If an attribute key is not specified in the rules, it is assumed to be a multi-value attribute.

If an attribute key is specified within the config, the default insert rule is overridden for that attribute for the given source, if one is provided.

If a source is not provided in the ingest_rules and the feed type is not action, the source defaults to the feed’s name.

This means that if an attribute is ingested more than once and an entry with rule: update exists for it in the ingest_rules, the new attribute value overwrites the existing value in ThreatQ instead of adding a new attribute key/value pair.

If an attribute is not specified in the ingest_rules, a new key/value pair is ingested alongside the existing ones.

The name and rule keys are required. The sources key is also required when the feed_type is action. If they are not specified, the feed will fail to upload.

rule: update enables the updating of an attribute and rule: insert restores normal multi-value behavior.

Installing an action without a sources key raises a DefinitionError. This also applies when reinstalling an action that is already installed.

    feeds:
      Phishlabs:
        category: Commercial
        namespace: threatq.connector.commercial.phishlabs.PhishLabs
        description: "Retrieves data at PhishLabs and does stuff."
        ingest_rules:
          attributes:
            - name: No Source Specified Example # Required
              rule: update # Required
              sources: Single Source # Required when feed_type is action
            - name: Single Source Specified Example
              rule: update # Enables single_value
              sources: Single Source # Required when feed_type is action
            - name: Multiple Sources Specified Example
              sources:
                - Single Source
                - Another Source
              rule: insert # Normal behavior
        ...
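The difference between the update and insert rules can be modeled with a few lines of Python. This is a simplified sketch of the behavior described in this section, not the platform's implementation: the function apply_ingest_rule and the attribute names are hypothetical, and per-source matching is left out.

```python
from typing import Dict, List, Tuple

Attribute = Tuple[str, str]  # (attribute name, attribute value)


def apply_ingest_rule(
    existing: List[Attribute], name: str, value: str, rules: Dict[str, str]
) -> List[Attribute]:
    """Return the attribute list after ingesting one (name, value) pair."""
    # Attributes without an entry in the rules stay multi-value (insert).
    rule = rules.get(name, "insert")
    if rule == "update":
        # Single-value behavior: the new value replaces any existing value.
        return [(n, v) for n, v in existing if n != name] + [(name, value)]
    # Multi-value behavior: the new pair is added alongside existing ones.
    return existing + [(name, value)]


rules = {"Confidence": "update"}  # hypothetical attribute with rule: update
attributes: List[Attribute] = []
incoming = [("Confidence", "Low"), ("Confidence", "High"), ("Tag", "a"), ("Tag", "b")]
for name, value in incoming:
    attributes = apply_ingest_rule(attributes, name, value, rules)

print(attributes)
```

In this model the Confidence attribute ends up with only its latest value, while Tag accumulates both values.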

Default Statuses¶

The default_indicator_status key is used to set the default status for Indicator Threat Objects ingested by a feed. Indicator statuses can be created by administrators via the ThreatQ UI under “System Configurations”. In addition to any user-created statuses, the following values can be used:

Active - Poses a threat and is being exported to detection tools.

Expired - No longer poses a serious threat.

Indirect - Associated to an active indicator or event (i.e. pDNS).

Review - Requires further analysis.

Whitelisted - Poses no risk and should never be deployed.

The default_signature_status key is used to set the default status for Signature Threat Objects ingested by a feed. The following values can be used:

Active - Currently poses a threat.

Expired - No longer poses a serious threat.

Inactive - Does not currently pose a threat, but could return to Active status.

Non-malicious - Signature does not describe a threat and therefore poses no risk.

Review - Requires further analysis.

Whitelisted - Poses no risk.

The default_indicator_status or default_signature_status keys are not required by feeds that do not ingest Indicator or Signature Threat Objects, respectively.

Timestamp Formatting¶

Feed providers typically require that datetime parameters be formatted in a certain consistent way when passed in a source request. In order to do this, one can provide a format string via the timestamp_format field. Additionally, a timezone can be provided via the timezone field, if required by the format. An example of how these fields are set in a feed can be found in the code-block below:

    feeds:
      Example Feed:
        timestamp_format: '%FT%H:%M:%S.%l%Z'
        timezone: 'US/Eastern'

Setting a timestamp_format will automatically format the since, until, and started_at run_meta parameters only within the feed’s source. If accessed via Jinja2 Expressions or Templates outside of a feed’s source, the since, until, and started_at run_meta parameters are in the format YYYY-MM-DD HH:mm:ssZZ where the default timezone is UTC (these are also the defaults in source if timestamp_format or timezone are not explicitly provided).

A supplemental feed or action may define its own timestamp_format and timezone. This will format run_meta source values for only that supplemental or action feed. This is new as of version 5.20.0. Previously, only a primary feed could define these values and any supplemental feeds would inherit from it. This inheritance behavior still exists. Actions would inherit from the primary as well, but there was no way to configure these values in the primary before this change.

Note

For actions before version 5.20.0, users can modify run_meta values and pass them to actions via run-params. For example, passing a run parameter like this: epoch: !expr (run_meta.started_at|to_timestamp).format('X') | int, and referencing it in the invoked feed source as run_params.epoch is equivalent to using timestamp_format: '%s' and referencing run_meta.started_at in source in version 5.20.0 and later.

The timestamp_format values are formatted using Python’s strftime Format Codes, which themselves are largely derived from the format codes required by the C89 language standard.

However, there is a special format string '%s' which formats the aforementioned parameters as integers instead of strings. This integer represents the number of seconds that have elapsed since the Unix epoch.

The timezone value supports a variety of timezones and aliases. For example, America/New_York, US/Eastern,

EST/EDT (depending on time of year) are all equivalent. Reference the list of tz database time zones

for available options.
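As a rough illustration of the two formatting behaviours described above, the following Python sketch renders a datetime with strftime codes and, separately, as an integer count of seconds since the Unix epoch. The helper name `format_run_time` and the fixed `-05:00` offset standing in for `US/Eastern` during standard time are assumptions made for this example; it is not how Pynoceros itself is implemented.

```python
from datetime import datetime, timedelta, timezone

# Fixed offset standing in for US/Eastern during standard time (an assumption
# for this sketch; a real tz database lookup also handles daylight saving).
EASTERN_STANDARD = timezone(timedelta(hours=-5), "EST")


def format_run_time(moment, timestamp_format, tz=timezone.utc):
    """Hypothetical helper mimicking how a run_meta value might be rendered."""
    if timestamp_format == "%s":
        # Special case: integer seconds since the Unix epoch, not a string.
        return int(moment.timestamp())
    return moment.astimezone(tz).strftime(timestamp_format)


started_at = datetime(2024, 1, 15, 12, 30, 0, tzinfo=timezone.utc)
as_text = format_run_time(started_at, "%Y-%m-%dT%H:%M:%S%z", EASTERN_STANDARD)
as_epoch = format_run_time(started_at, "%s")
print(as_text)   # 2024-01-15T07:30:00-0500
print(as_epoch)  # 1705321800
```

The string result changes with the chosen timezone, while the epoch integer does not, since it always counts seconds from the same instant.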

Warning

timestamp_format is not to be confused with the Timestamp Filter.

Feed Run Metadata¶

The following contextual information is provided to the Feed Definition and available for access via

Jinja2 Expressions or Templates. All contextual feed run data is provided via the run_meta object.

For example, to access a feed run’s started at time, one would write: !expr run_meta.started_at.

started_at - A string or integer representing the time that the current Feed Run actually started. This value is formatted per the feed’s timestamp_format only if accessed in the feed’s source.

since - A string or integer representing the start of the date range that is queried from the data source. This value is formatted per the feed’s timestamp_format only if accessed in the feed’s source. Using this in a primary feed’s source enables support for manual runs. Does not need to be used along with until.

until - A string or integer representing the end of the date range that is queried from the data source. This value is formatted per the feed’s timestamp_format only if accessed in the feed’s source. Must be used along with since.

uuid - Each feed run gets a unique UUID to help distinguish it in the ThreatQ Appliance. This value is provided purely for informational purposes and is not generally used in a Feed Definition.

trigger_type - A string that can either be manual if the feed run was started by a user or scheduled if the feed run was started by the system during normal periodic feed processing. This value can be used to make adaptive queries based on how the run was triggered.

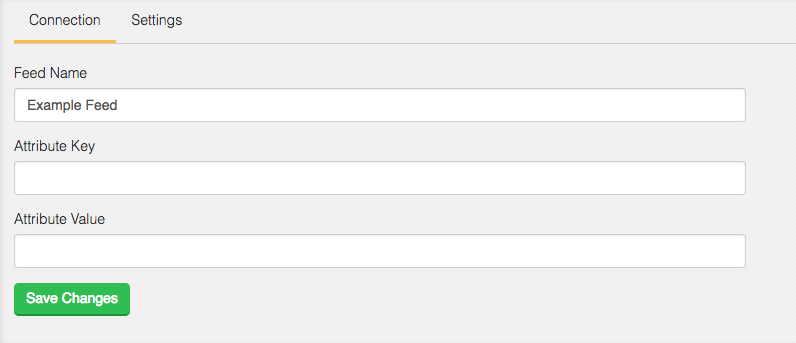

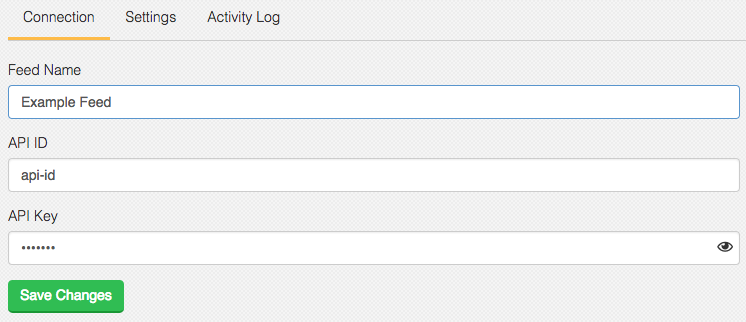

User Fields¶

CDFs have the capability to define user_fields. These fields are presented to users in the ThreatQ UI.

This allows feed designers to inject configuration options, credentials, or any other information that is needed

for a feed to operate. To learn more about the configuration options available when declaring user fields, see the

User Fields and Parameters page.

Feed Run Response¶

A CDF response is provided via the response object. For example, to access a CDF’s HTTP response header, one would

write !expr response.headers. At this time, only HTTP headers are supported in the response object.

headers - A dictionary representing the response’s HTTP headers. The values in this dictionary are dynamic, because different feeds can provide different header values. When accessing specific values in the dictionary, the mapping must exist or else an error will occur.
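The requirement that a header mapping must exist can be pictured with an ordinary Python dictionary. This is only an analogy: the header names below and the helper `header_or_default` are hypothetical, and the exact error raised inside a CDF expression is not specified here.

```python
# Stand-in for response.headers; real header names vary by provider.
response_headers = {"Content-Type": "application/json", "X-Next-Page": "2"}


def header_or_default(headers, name, default=None):
    """Hypothetical helper: look a header up without raising if it is absent."""
    return headers.get(name, default)


next_page = header_or_default(response_headers, "X-Next-Page")
rate_limit = header_or_default(response_headers, "X-RateLimit-Remaining", "unknown")

try:
    response_headers["X-RateLimit-Remaining"]  # direct access to a missing key
    missing_key_failed = False
except KeyError:
    missing_key_failed = True
```

Guarding the lookup, or checking for the key first, avoids failing a run just because a provider omitted an optional header.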

Feed Type¶

There are four different types of feeds that can be defined:

Primary (default)

Supplemental

Fulfillment

Action

A feed’s type is specified by the optional feed_type key. By default, the value of feed_type is None,

which implies that the feed is a primary feed.

Primary Feeds¶

```yaml
Formatted Feed Name:
  source:
    ...
  filters:
    ...
  report:
    ...
```

If a feed’s type is not explicitly specified, then the feed is a primary feed. Primary feeds are listed in the ThreatQ UI’s

“My Integrations” page and are scheduled by the feed run scheduler when enabled. If the primary feed uses at least

run_meta.since (see Feed Run Metadata) in its source section, then manual runs are

supported. Users can kick off manual runs via the ThreatQ UI or CLI when the feed is enabled.

Note

The option to run a manual run may not be visible in the ThreatQ UI until a scheduled run is visible in the feed’s Activity Log.

At least one primary feed must be declared within a Feed Definition. There may be any number of primary feeds within a single Feed Definition.

A “connector” source is created for a primary feed when it is enabled for the first time. The source’s name matches the name of the primary feed. All objects ingested by the primary feed belong to this source.

Note

There is currently no way to overwrite or change the source of objects ingested by a feed.

Primary feeds are the only feed type that utilizes the report section.

Note

Advanced use case: One can specify the primary feed name as _default in the Feed Definition. If a feed’s

database record in the connectors table references a Feed Definition that makes use of _default, and this

Feed Definition does not contain a primary feed whose name matches the database record’s name, then the _default

feed is aliased under the database record’s feed name.

This is how STIX/TAXII feeds dynamically created via the ThreatQ UI work; they all reference the same Feed Definition

which has a _default primary feed.
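The `_default` fallback described in the note above can be sketched as a simple lookup. The function `resolve_primary_feed` and the dictionary shapes are hypothetical and only model the aliasing rule as stated; they do not reflect the actual Pynoceros code.

```python
def resolve_primary_feed(definition_feeds, record_name):
    """Hypothetical lookup: pick the feed entry for a connectors-table record."""
    if record_name in definition_feeds:
        return record_name, definition_feeds[record_name]
    if "_default" in definition_feeds:
        # Alias the _default feed under the database record's feed name.
        return record_name, definition_feeds["_default"]
    raise KeyError(f"no primary feed named {record_name!r} and no _default feed")


feeds = {"_default": {"source": "..."}}
alias, body = resolve_primary_feed(feeds, "My TAXII Collection")
print(alias)  # My TAXII Collection
```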

Supplemental Feeds¶

```yaml
Formatted Feed Name:
  feed_type: supplemental
  source:
    ...
  filters:
    ...
```

If a feed’s type is supplemental, then the feed is not listed in the ThreatQ UI and cannot be externally triggered.

Supplemental feeds can be invoked by any feed type (including other supplemental feeds) in the same Feed Definition.

The purpose of supplemental feeds is to make additional API requests to the feed provider or, really, any external

or internal resource. All values yielded from a supplemental feed’s Filter Chain are collected into a single list.

Note

While they cannot be avoided for use cases in which a single feed needs to make multiple requests, one should be cautious when using supplemental feeds. Since all values yielded from a supplemental feed’s Filter Chain are collected into a single list, there are two performance hits to take into account:

Memory consumption

Blocking the task of the calling feed that invoked the supplemental feed

In other words, a supplemental feed does not function like an asynchronous generator that has values yielded from its Filter Chain one-at-a-time. All the values need to be collected into memory, and subsequent tasks, which may belong to a stage or filter, will not execute until the supplemental feed is finished. However, other tasks running in parallel to the blocked task will not be affected (think of a supplemental feed being invoked for a single value yielded from the Iterate Filter; other yielded items may be at different stages of the Filter Chain or Feed Run Pipeline).

A general rule-of-thumb to keep in mind is to do the minimal amount of work necessary in a supplemental feed in order to get back to processing in the primary feed. For example, if a supplemental feed returns a JSON string containing a list of dictionary mappings, there is absolutely no reason to do further processing of the contents of this JSON string within the supplemental feed’s Filter Chain. Have the supplemental feed return either the JSON string or the parsed JSON, and do further transformations within the primary feed’s Filter Chain.

A common anti-pattern is to use the Iterate Filter at the end of a supplemental feed’s Filter Chain. This is simply wasting time and resources since each yielded item is collected back into a list anyway!
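The difference between yielding values one at a time and collecting them into a list can be sketched in plain Python. The functions `filter_chain` and `invoke_supplemental` are hypothetical stand-ins used to model the behaviour described in this note, not Pynoceros APIs.

```python
def filter_chain(items, log):
    """Stand-in for a Filter Chain that yields values one at a time."""
    for item in items:
        log.append(f"yielded {item}")
        yield item * 10


def invoke_supplemental(items, log):
    """Hypothetical model of a supplemental feed call: collect everything first."""
    collected = list(filter_chain(items, log))  # blocks until the chain is exhausted
    log.append("supplemental finished")
    return collected


log = []
values = invoke_supplemental([1, 2, 3], log)
log.append(f"caller resumes with {len(values)} values")
print(log)
```

Every value is yielded before the caller resumes, which is why iterating at the end of a supplemental feed gains nothing: the items are gathered back into one list regardless.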

The primary way to invoke a supplemental feed is via the Set Filter in a feed’s Filter Chain. Supplemental feeds are also used for HTTP Token-based Authentication.

Regardless of invocation method, a dictionary mapping can be provided via the run-params argument. This mapping

can then be accessed within the supplemental feed’s definition by accessing run_params via

Jinja2 Expressions or Templates. To see an example use case for run-params,

click here.

Supplemental feeds inherit the template context of the calling feed, which means they have access to the following via Jinja2 Expressions or Templates:

run_meta(see Feed Run Metadata)

run_vars

user_fields(see User Fields)

As a result, any information needed from any of the above dictionary mappings does not need to be passed via run-params

since these dictionary mappings can be accessed directly.

A supplemental feed also inherits the timestamp_format value of the primary feed that invoked it (see Timestamp Formatting).

Fulfillment Feeds¶

```yaml
Formatted Feed Name:
  feed_type: fulfillment
  source:
    ...
  filters:
    ...
```

If a feed’s type is fulfillment, then the feed is not listed in the UI and cannot be directly triggered.

A fulfillment feed run automatically starts at the end of a scheduled or manual primary feed run for any

primary feed(s) that exist(s) in the same Feed Definition.

The purpose of a fulfillment feed is to fulfill placeholder files ingested from attachment-sets in primary feed(s)

located in the same Feed Definition. A fulfillment feed is provided a list of placeholder filenames for files matching

the same source as the primary feed triggering the fulfillment feed run. This list of placeholder filenames is available via

run_params.placeholders in the fulfillment feed.

Note

When a primary feed ingests an attachment object without a content value, the attachment object

is ingested as a placeholder file. Placeholder files have their name modified prior to ingestion. A

placeholder file’s name, as stored in the ThreatQ Platform, is in the format:

pending-{{original_name}}.txt

However, the name available in the fulfillment feed’s run_params.placeholders list has the added

“pending-” and “.txt” removed.

So, for example, if a primary feed ingests a placeholder file whose name is my-malware-sample.tar.gz,

the stored placeholder file’s name is pending-my-malware-sample.tar.gz.txt. However, the name provided

by run_params.placeholders would be my-malware-sample.tar.gz.
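The naming rule above can be expressed as a pair of small helpers. The function names are hypothetical; the prefix and suffix are taken from the format described in this note.

```python
PREFIX = "pending-"
SUFFIX = ".txt"


def stored_placeholder_name(original_name):
    """Name of the placeholder file as stored in the ThreatQ Platform."""
    return f"{PREFIX}{original_name}{SUFFIX}"


def placeholder_param_name(stored_name):
    """Strip the added prefix and suffix, as seen in run_params.placeholders."""
    if stored_name.startswith(PREFIX) and stored_name.endswith(SUFFIX):
        return stored_name[len(PREFIX):-len(SUFFIX)]
    return stored_name


stored = stored_placeholder_name("my-malware-sample.tar.gz")
print(stored)                          # pending-my-malware-sample.tar.gz.txt
print(placeholder_param_name(stored))  # my-malware-sample.tar.gz
```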

Note

For clarity, run_params.placeholders is a list of strings representing each placeholder file’s

name. It is not a list of file (attachment) objects. Other attributes of the placeholder file, such as its

title, type, or mime_type, are not exposed to the fulfillment feed.

A fulfillment feed run will be provided all placeholder files matching the source of the primary feed regardless of which primary feed run ingested the placeholder file. Therefore, if a provider does not yet have file content for a placeholder file during one fulfillment feed run, it will be tried again in subsequent fulfillment feed runs.

Fulfillment feeds cannot be invoked by other feed types, but fulfillment feeds can invoke supplemental feeds. Fulfillment feeds typically depend on supplemental feeds for downloading a given file’s content. A fulfillment feed’s Filter Chain must yield a dictionary mapping containing attributes of a fulfilled file:

```yaml
- new:
    name: str
    title: str
    type:
      name: str  # ThreatQ file type (dynamically created if it does not exist)
    content: !expr value.content  # downloaded file content (acceptable value types: aiohttp.StreamReader, str, bytearray, bytes)
```

Since the placeholder file object is not passed into the fulfillment feed for it to be mutated and then pushed back

into ThreatQ, the dictionary mapping yielded from the fulfillment feed’s Filter Chain needs to be associated back to

a placeholder file object. This association is done by attempting to match the yielded dictionary mapping’s

name or title against the regex ^{{name}}\b, where {{name}} is the original placeholder file’s name.
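A minimal sketch of this matching rule in Python follows. The helper names are hypothetical, and escaping the placeholder name with `re.escape` is an assumption of this sketch; whether the platform escapes the name before building the regex is not stated here.

```python
import re


def matches_placeholder(placeholder_name, candidate):
    """Apply ^{{name}}\\b to a yielded name or title (name escaped by assumption)."""
    pattern = re.compile(r"^" + re.escape(placeholder_name) + r"\b")
    return pattern.search(candidate) is not None


def find_placeholder(placeholders, fulfilled):
    """Return the first placeholder whose name matches the mapping's name or title."""
    for placeholder_name in placeholders:
        for key in ("name", "title"):
            if matches_placeholder(placeholder_name, fulfilled.get(key, "")):
                return placeholder_name
    return None


print(matches_placeholder("my-malware-sample.tar.gz", "my-malware-sample.tar.gz (analysis)"))  # True
print(matches_placeholder("my-malware-sample.tar.gz", "my-malware-sample.tar.gzip"))           # False
```

Because the pattern is anchored at the start and ends on a word boundary, a yielded name may carry extra text after the placeholder name, but it may not simply extend the final word.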

The yielded dictionary mapping can also contain attributes or tags to add to the fulfilled file object:

```yaml
- new:
    # . . .
    # (Required file fields from above snippet)
    # . . .
    tags:
      - name: !expr value.tags  # where `tags` is a list of strings
    attributes:  # or `!expr value.attributes`, where `attributes` is a list of mappings formatted like the following
      - name: Attribute Name 1
        value: !expr value.attribute1
        published_at: !expr value.created_date  # Optional
      - name: Attribute Name 2
        value: !expr value.attribute2
        published_at: !expr value.created_date  # Optional
```

A Feed Definition can contain at most one fulfillment feed. Fulfilled files maintain the same source as the primary feed that ingested its placeholder.

Fulfillment feeds (and attachments in general) can be difficult to use and work with. It is preferable to link back to the provider as an Attribute Value if the provider hosts the file, whether it be an analysis report, malware sample, sandbox execution result, etc. Creating a Report Threat Object is an advisable alternative to creating an Attachment Threat Object if the file is a report or results hosted by the provider.

Action Definitions¶

```yaml
Formatted Action Name:
  feed_type: action
  invoking_filter:
    ...
  user_fields:
    ...
  source:
    ...
  filters:
    ...
  report:
    ...
```

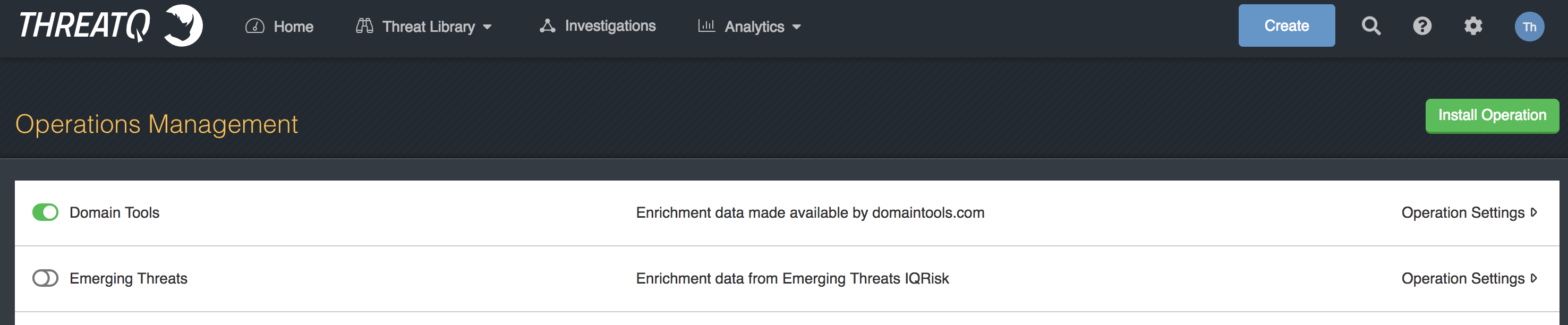

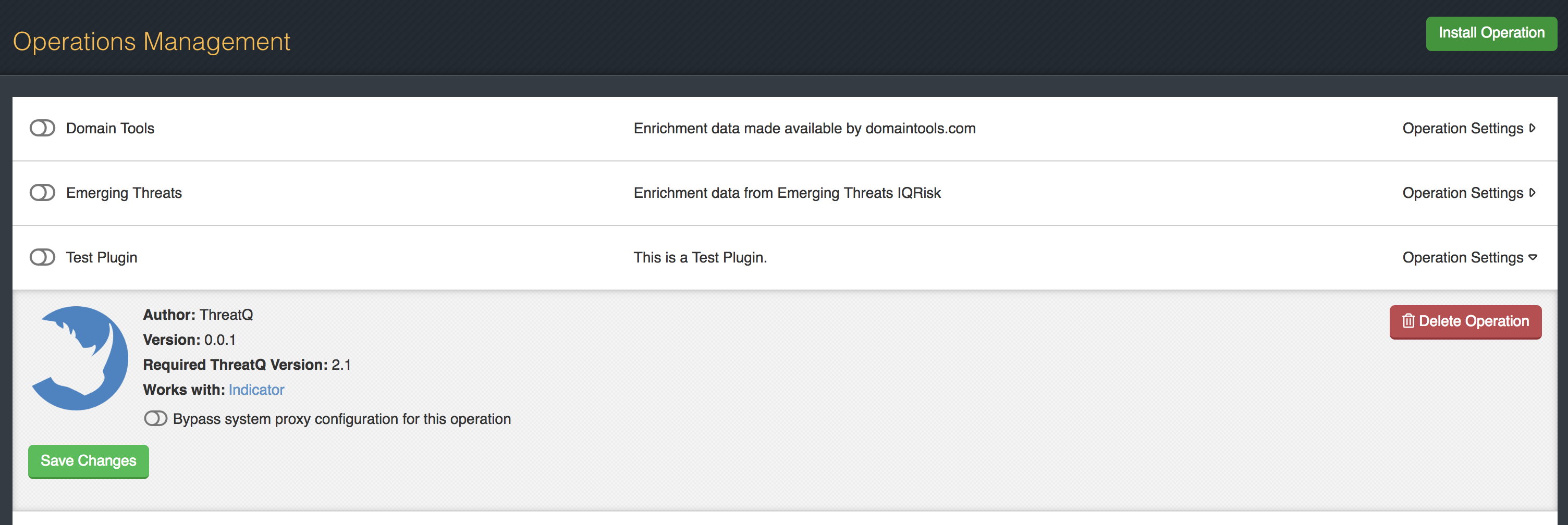

If a feed’s type is action, then the feed is not installed as an Integration in the ThreatQ platform, but instead as an “Action” on the Orchestrator page of ThreatQ. An action is not executable by itself; it is executed via the ThreatQ Orchestrator.

tq-feed run will execute an action definition and pass it run_params as defined in a run-params-file. The Threat Objects are passed literally, so an author will likely want the run_params to match the data that the ThreatQ API would return from a threat-collection source.

An author has control over the invoking_filter, which is the filter that is executed when the action is invoked from the ThreatQ Orchestrator (see Writing an invoking_filter). The invoking_filter is honored literally. Alternatively, an author can skip this and create a config_options dictionary mapping with a supported_objects key and a list of Threat Object types as its value. The config_options route will only create an invoking_filter for Indicator Threat Objects. If additional Threat Object types are desired, the invoking_filter should be written by the author.

Note

The invoking_filter is not executed when the action is executed via tq-feed run.

Note

An author should take care when writing an invoking_filter so as not to disturb the integrity of the data in the primary filter chain of the CDW into which it is finally inserted when constructed by the ThreatQ Orchestrator.

When writing an action definition, an author should assume that the Threat Objects passed to it contain all of the data returned for the object from the threat-collection source. For example, as of writing, tq-feed workflow is configured to request only the fields type, source, and value. This became configurable in ThreatQ Version 5.12.1 with the extension of config_options. See Action Configuration Options for more information.

The threat-collection feed source for the primary which an action is finally assembled into is currently configured as such:

```yaml
threat-collection:
  collection_hash: <assigned_hash>
  object_collections:
    - indicators
  object_fields_mapping:
    indicators:
      - value
      - type
      - sources
  object_sort_mapping:
    indicators:
      - -updated_at
  chunk_size: 100
  objects_per_run: 123
  since: !expr run_meta.since
  until: !expr run_meta.until
```

The object_collections, object_fields_mapping, object_contexts_mapping, and object_sort_mapping values are all configurable by specifying config_options in the Action definition.

In ThreatQ Versions 5.12.0 and below, the report of an action mapped its resulting data to a nested dictionary

matching the pattern: {<action_name>}_action. This was a slight departure from normal report behavior, and was

changed in ThreatQ Version 5.12.1 to match the expected behavior of regular feeds.

For a modern example, see Action Definition.

Action Configuration Options

Beginning in ThreatQ Version 5.12.1, an author may specify a config_options dictionary mapping in an action definition as a means of configuring the primary feed’s threat-collection source mappings. One consequence is that all actions within the final assembled workflow are executed with the sum of the threat-collection context derived from the config_options mappings of all actions in the workflow.

The supported_objects mapping is required if there is no invoking_filter. For Indicators only, the invoking_filter

will be created by the ThreatQ Orchestrator if there is no invoking_filter specified. This has limited use and will likely

be deprecated in a future release. It is recommended that an author specify an invoking_filter.

An author may declare both an invoking_filter and supported_objects. In this case, the invoking_filter is

honored, but the supported objects field is rendered in the UI.

In order for an action to render the supported objects field in the UI, the author must include supported_objects.

The config_options may look like the following:

```yaml
feeds:
  ActionFeed:
    feed_type: action
    config_options:
      supported_objects:
        - indicators:
            - FQDN
            - IP Address
```

The above example will create an invoking_filter which would be inserted into the final workflow as follows:

```yaml
- invoke-connector:
    condition: !expr value.0.threatq_object_type in ["indicator"]
    connector:
      iterate: True
      name: TestNoInvokingFilter
      return: value
      run-params: !expr value
      to-stage: publish
    filters:
      - each:
          - drop: !expr value.type not in ['IP Address', 'FQDN']
```

See Invoke Connector Filter for more specific implementation details.

Starting in ThreatQ Version 5.12.1, the config_options may also be used to configure the threat-collection source

mappings of the primary feed. The config_options may look like the following:

```yaml
feeds:
  ActionFeed:
    feed_type: action
    config_options:
      supported_objects:
        - adversaries
      object_fields_mapping:
        adversaries:
          - name
          - sources
      object_contexts_mapping:
        adversaries:
          - attributes
      object_sort_mapping:
        adversaries:
          - -updated_at
```

The above example will create a threat-collection source mapping which would be inserted into the final workflow as follows:

```yaml
threat-collection:
  collection_hash: <assigned_hash>
  object_collections:
    - indicators
    - adversaries
  object_fields_mapping:
    indicators:
      - value
      - type
      - sources
    adversaries:
      - name
      - sources
  object_sort_mapping:
    indicators:
      - -updated_at
    adversaries:
      - -updated_at
  chunk_size: 100
  yield_chunk: True
  objects_per_run: 123
  since: !expr run_meta.since
  until: !expr run_meta.until
```

Note that the new fields are in addition to the existing fields, and not a replacement. This means that the object_collections,

object_fields_mapping, object_contexts_mapping, and object_sort_mapping will be merged with the existing fields.

By default, Indicators are always included in the source.
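The additive merge described above can be modelled with a short Python sketch. The function `merge_config_options` and the `DEFAULT_SOURCE` constant are hypothetical, and the de-duplication of repeated entries is an assumption; the real merge performed by the ThreatQ Orchestrator may differ in detail.

```python
MAPPING_KEYS = ("object_fields_mapping", "object_contexts_mapping", "object_sort_mapping")

# Default threat-collection values, mirroring the default source shown earlier.
DEFAULT_SOURCE = {
    "object_collections": ["indicators"],
    "object_fields_mapping": {"indicators": ["value", "type", "sources"]},
    "object_sort_mapping": {"indicators": ["-updated_at"]},
}


def merge_config_options(base, config_options):
    """Hypothetical merge: add an action's config_options to the existing mappings."""
    merged = {"object_collections": list(base.get("object_collections", []))}
    for key in MAPPING_KEYS:
        merged[key] = {name: list(vals) for name, vals in base.get(key, {}).items()}
    for entry in config_options.get("supported_objects", []):
        # Entries are either a plain name or a one-key mapping of name to types.
        name = entry if isinstance(entry, str) else next(iter(entry))
        if name not in merged["object_collections"]:
            merged["object_collections"].append(name)
    for key in MAPPING_KEYS:
        for name, values in config_options.get(key, {}).items():
            existing = merged[key].setdefault(name, [])
            for value in values:
                if value not in existing:
                    existing.append(value)
    return merged


action_options = {
    "supported_objects": ["adversaries"],
    "object_fields_mapping": {"adversaries": ["name", "sources"]},
    "object_contexts_mapping": {"adversaries": ["attributes"]},
    "object_sort_mapping": {"adversaries": ["-updated_at"]},
}
merged = merge_config_options(DEFAULT_SOURCE, action_options)
print(merged["object_collections"])  # ['indicators', 'adversaries']
```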

Known Limitations

Since the execution environment differs between development and production, an author must be careful to ensure that the action definition is written in a production-friendly manner. Specifically:

The keys contained in user_fields and template_values, and the name of the Action itself, must be unique across all actions in ThreatQ.

A Definition YAML may not contain both Primary and Action definitions.

Note

This feature is in active development, and is subject to change. We intend to resolve many of the above limitations in the near future.

Source Definition¶

In a Feed Definition, the source is what specifies how to fetch data from a provider. It may, for instance, contain

the URL for an API along with authentication information for an endpoint.

See the CDF Sources page for a detailed list of all source types available for use in Feed Definitions complete with comprehensive examples.

Filter Chain¶

The Filter Chain defines how to manipulate feed data ahead of ingestion into ThreatQ. It follows the operations in the Source Definition, and precedes the Reporting stage.

See the CDF Filters page for a detailed list of all filters available for use in Feed Definitions complete with comprehensive examples.

Reporting¶

Reporting allows a feed definition writer to define how threat objects are created and related from the data output by the Filter Chain. For more information, see the CDF Reporting page.

CDF Sources¶

Sources allow a feed definition writer to specify how and where to download feed data from a provider. This section provides in-depth explanations of each source type available for use within a CDF Feed’s Source Definition.

File Source¶

Overview¶

The File Source is used to read a specified file from the filesystem. This source is often used for development and debugging, as a CDF writer can avoid making wasteful requests for each feed run. However, some feeds may require parsing files to execute.

If a CDF writer has a source with unpredictable intel, a static file can be modified to easily test against edge cases.

Usage¶

```yaml
source:
  file:
    mode: rb  # OPTIONAL - Read in Binary mode
    file: /path/to/source_file.json
```

Note

When declaring a file path, the path should be absolute.

Examples¶

Suppose a CDF writer has a shared folder integrations with a json file

representing data they want to test against. They can use the static file like so:

```yaml
source:
  file: /var/www/integrations/file.json
```

Suppose a CDF writer has a large file that must be parsed to execute the Feed. The file chunking feature, added in 5.4.0, loads the file more efficiently by conserving memory. To use it, set yield_chunk to True (default False), chunk_size to the approximate size of each chunk of data being yielded to the API (default 1000), and record_responses to True if the file chunks should be recorded (default False).

```yaml
source:
  file:
    file: /var/www/integrations/file.json
    chunk_size: 9999
    record_responses: False
    yield_chunk: True
```

In some cases, no file needs to be provided at all. For this, None or "None" can be given as the value of the file key. The source then simply returns, which can save memory and improve the performance of the Feed run.

```yaml
source:
  file: None
```

OR

```yaml
source:
  file: "None"
```

HTTP Source¶

Overview¶

The HTTP Source is used to query/poll threat intelligence data from an HTTP provider. This source type can be used with RESTful APIs or plain-text file reading. Basic usage of the HTTP Source is as follows:

```yaml
source:
  http:
    base_url: https://www.example.com/
    url: feed
    method: GET
    params:
      exampleA: 22
      exampleB:
        - 'A'
        - 'B'
        - 'C'
    data:
      exampleA: 42
      exampleList:
        - 1
        - 2
        - 3
    headers:
      Accepts: application/json
    request_content_type: application/json
    response_content_type: text/plain
    auth:
      ...
    pagination:
      ...
    compress: None
    chunked: 13107200
    expect100: False
    host_ca_certificate: |-
      -----BEGIN CERTIFICATE-----
      AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA
      BBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBB
      CCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCC
      -----END CERTIFICATE-----
    verify_host_ssl: True
    disable_proxies: False
    total_timeout: 120
    status_code_handlers:
      201: ignore
      404:
        fail: "we got a 404 here"
      418: pass
      403: pass_save
      429:
        attempts: 3  # Try a total of 3 times
        delay: 5  # Wait 5 seconds after this status code is received
```
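The list-valued `exampleB` parameter in the definition above is sent as one query string pair per list item. The Python sketch below reproduces that expansion with the standard library; joining `base_url` and `url` by plain concatenation is an assumption made for this illustration.

```python
from urllib.parse import urlencode

base_url = "https://www.example.com/"
url = "feed"
params = {"exampleA": 22, "exampleB": ["A", "B", "C"]}

# doseq=True emits one key/value pair for each item of a list value.
query = urlencode(params, doseq=True)
final_url = f"{base_url}{url}?{query}"
print(final_url)
# https://www.example.com/feed?exampleA=22&exampleB=A&exampleB=B&exampleB=C
```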

The following fields are available for use within an HTTP Source, most closely mapping to their respective parameters on aiohttp.ClientSession.request():

base_url - Optional, String. When supplied, the url field is appended to base_url in order to calculate the final target URL to poll for feed data.

url - Required, String. The target URL to poll for feed data.

method - Optional, String. Defaults to GET. The HTTP method for this request. Accepts any HTTP method verb, notably GET, POST, PUT, and DELETE.

params - Optional, Mapping. Query string parameters to be sent along with the request. Parameters are expected as a simple mapping. Given a list value, the list is expanded such that there is a query string key/value pair for each item in the value list. The example above, for instance, sends a request to the final URL: https://www.example.com/feed?exampleA=22&exampleB=A&exampleB=B&exampleB=C

data - Optional, Mapping. Data to be sent along via the request body. JSON body data is automatically encoded for the request by supplying application/json to the request_content_type field.

headers - Optional, Mapping. Simple mapping of header key/value pairs to be sent along with the request.

request_content_type - Optional, String. Explicitly sets the Content-Type header of the HTTP request. Passing application/json as a value to this field automatically formats and encodes any data passed to the data field as JSON.

response_content_type - Optional, String. Specifies the content type that Dynamo should read responses from this source as. Usually, providers specify the content type of a response appropriately via the Content-Type response header. Sometimes, however, providers do not specify the correct Content-Type with the response (i.e., specifying Content-Type as text/html instead of text/plain). In these cases, a response_content_type designation is required in order to help Dynamo correctly deserialize data. Currently, HTTP Source supports the following content types:

text/plain

application/json

If none of the available content types can decode the response data or if Dynamo cannot determine the appropriate way to deserialize the response data, the response data is treated as binary file content and passed to the Filter Chain as an aiohttp.StreamReader. TODO: See Attachment ingestion.

auth - Optional, Mapping. Specifies the authentication object to be used when requesting data from the provider. See HTTP Source Authentication for more details and usage.

pagination - Optional, Mapping. Specifies how to paginate large volumes of data returned by a provider. See HTTP Pagination for more details and usage.

compress - Optional, Bool. Defaults to None. Specifies whether the request should be compressed. Passing True to this field results in aiohttp compressing the request data.

chunked - Optional, Int. Defaults to None. Specifies that the request should be sent as a chunked request, using the supplied Int value as the chunk size.

expect100 - Optional, Bool. Defaults to False. Specifies whether the request should expect an HTTP 100 continue response from the provider.

host_ca_certificate - Optional, String. Defaults to None. Specifies a base64 PEM encoded CA Certificate Bundle to verify the provider’s SSL certificate against. If not provided, the operating system’s default CA Certificate Bundle is used. Applicable only to https URLs.

verify_host_ssl - Optional, Bool. Defaults to True. Specifies whether the provider’s certificate and hostname are verified for each request. Applicable only to https URLs.

disable_proxies - Optional, Bool. Defaults to False. Specifies whether configured proxies should be ignored when making HTTP requests. New in version 4.31.0.

total_timeout - Optional, Int. Defaults to 119 seconds. Specifies the total timeout of the HTTP request, including connection establishment, request sending, and response reading. New in version 4.36.0.

status_code_handlers - Optional, Mapping. Specifies a mapping of HTTP status code to a handler action. By default, status_code_handlers is configured with 204: ignore. To override this behavior, one can specify 204: null. Currently, the following handler actions are available:

ignore - Denotes that a response of the specified status code should be ignored. Whatever value is returned will not be forwarded along to the filter chain and the response will not be logged in a response log file. If specified, pagination will still trigger after a response is ignored. New in version 4.34.0.

fail - Denotes that a response of the specified status code should throw a user-defined error. New in version 5.0.1.

pass - Denotes that a response of the specified status code should be passed along to the filter chain, without saving the response to disk in the feed’s activity directory. New in version 5.3.0.

pass_save - Denotes that a response of the specified status code should be passed along to the filter chain and responses will be saved to disk in the feed’s activity directory. New in version 5.3.0.

delay - Denotes that a response of the specified status code should trigger a delay of any further handling of the request for a specified number of seconds. Useful in pagination and in combination with attempts or ignore. New in version 5.19.0.

attempts - Denotes that a response of the specified status code should attempt the request up to the specified number of times. Minimally, any request will make 1 attempt by default. New in version 5.19.0.

Handler assignments which take numbers as values are intended to be positive, and attempts must be an integer. If invalid values are supplied, an error will be logged, and an attempt will be made to continue through the Feed Run with corrected values.
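How the ignore, fail, attempts, and delay handlers might interact can be sketched as a small retry loop. The function `request_with_handlers` is hypothetical and its ordering of delay and retry is one plausible reading of the handler descriptions, not the Dynamo implementation.

```python
import time


def request_with_handlers(send, handlers, sleep=time.sleep):
    """Hypothetical loop modelled on the ignore, fail, attempts, and delay handlers."""
    attempt = 0
    while True:
        attempt += 1
        status, body = send()
        handler = handlers.get(status)
        if handler == "ignore":
            return status, None, attempt   # response is not forwarded
        if not isinstance(handler, dict):
            return status, body, attempt   # no handler, or pass / pass_save
        if "fail" in handler:
            raise RuntimeError(handler["fail"])
        if "delay" in handler:
            sleep(handler["delay"])        # wait after this status code is received
        if attempt >= handler.get("attempts", 1):
            return status, body, attempt   # attempts exhausted


responses = iter([(429, "slow down"), (429, "slow down"), (200, "ok")])
delays = []  # record requested delays instead of actually sleeping
handlers = {204: "ignore", 429: {"attempts": 3, "delay": 5}}
result = request_with_handlers(lambda: next(responses), handlers, sleep=delays.append)
print(result)  # (200, 'ok', 3)
print(delays)  # [5, 5]
```

Passing a recording function for `sleep` keeps the example instant; with the default `time.sleep`, each 429 would pause for the configured number of seconds.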

Examples¶

Simple HTTP GET¶

Many data sources exist as just an openly available plain-text file on a web server. Because this is so common, a shortcut to simplify these source definitions is available:

```yaml
source:
  http: http://www.example.com/download_file.txt
```

This definition is the equivalent of:

```yaml
source:
  http:
    url: http://www.example.com/download_file.txt
```

HTTP Dynamic URL¶

Sometimes, a data source may have a different URL for different types of endpoints, so having the url value as an

expression may be helpful:

```yaml
source:
  http:
    base_url: http://www.example.com/
    url: !expr 'historic.php' if run_meta.trigger_type == 'manual' else 'current.php'
```

This references the run_meta object to determine how this Feed Run was triggered. The final URL

is http://www.example.com/current.php if the current Feed Run is a normal, scheduled Hourly/Daily run. If the Feed

Run was triggered manually by a user, the URL is http://www.example.com/historic.php.
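For readers more familiar with Python than Jinja2, the conditional in the `url` expression behaves like the following sketch. The function `resolve_url` is hypothetical, and appending `url` to `base_url` by plain concatenation is an assumption made for illustration.

```python
def resolve_url(base_url, trigger_type):
    """Python equivalent of the url expression above (illustrative only)."""
    url = "historic.php" if trigger_type == "manual" else "current.php"
    return base_url + url


print(resolve_url("http://www.example.com/", "scheduled"))  # http://www.example.com/current.php
print(resolve_url("http://www.example.com/", "manual"))     # http://www.example.com/historic.php
```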

HTTP Pagination¶

Many APIs have so much information in a query result that it is unwieldy to return it all at once. In order to make this

data more digestible, providers employ some form of pagination. HTTP Pagination within CDF has been designed as a

largely configurable object nested within the http definition. The following shows a typical HTTP Pagination setup

wherein the provider expects the offset and limit query string parameters to paginate the response data:

```yaml
source:
  http:
    url: http://www.example.com/download_file.php
    pagination:
      template_values:
        offset: 0
        limit: 1000
      condition: !expr prev_request_params.limit == (prev_response_data | length)
      params:
        offset: !expr "(prev_request_params.offset or 0) + 1"
        limit: !expr limit
```

The following fields are available when writing an HTTP Pagination definition:

template_values - Optional, Mapping. Allows for declaration of constant default values for use specifically within the pagination definition. For more information on these see the Template Values section.

condition - Required, Expression. The condition that is checked after each request to see if the HTTP source should continue requesting paginated data. As long as condition resolves to True, paginated data continues to be requested. In the example above, condition evaluates to True as long as the length of data from the previous response is equal to the limit value.

Note

Since the condition check is run after a request is sent and a response is received, the first request in a pagination supported HTTP source is always sent.

Note

When developing a Feed Definition, one can easily disable an HTTP Pagination by setting condition to !expr False.

url - Optional, Expression. Allows pagination to dynamically change the URL. When supplied, any base_url supplied to the HTTP Source definition containing the pagination in question is automatically included within HTTP Pagination and prepended to url in the same manner as HTTP Source.

params - Optional, Mapping. Query string parameters which pagination dynamically changes before each request by calculating the Jinja2 expressions supplied. The resulting dictionary of query string parameters calculated by pagination is used to update the query string request parameters declared within the HTTP Source definition. Thus, any query string parameters that are set within both the HTTP Source definition and the HTTP Pagination definition will have the values set by the HTTP Pagination definition.

headers - Optional, Mapping. Supports the same functionality as the params field, but for request headers.

data - Optional, Mapping. Supports the same functionality as the params field, but for request body data.

The following values are available via Jinja2 Expressions within a HTTP Pagination. These values are updated following each request sent/response received by the HTTP Source. Using these values, a Feed Definition writer can transform the request fields necessary for pagination:

prev_request_params - Mapping of the query string parameters that were used in the previous request. Note that the provided example looks at the limit value in the condition field.

prev_request_headers - Mapping of the headers that were used in the previous request.

prev_request_data - Mapping of the body data that was sent in the previous request.

prev_response_headers - Mapping of the headers that were sent by the provider with the previous response.

prev_response_data - The raw response data (pre-filter chain) that came back from the data source. Note that in the provided example this is used in the condition field in conjunction with Jinja2's length filter.

page_count - Int. The number of pages that have been requested by the HTTP Pagination in question. page_count starts at 1 and is incremented by 1 each time a paginated request is made.
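The control flow described in this section can be modelled in a few lines of Python. This is an illustrative sketch only, not Pynoceros code: paginate, fetch, and fake_fetch are hypothetical names, and the provider is assumed to treat offset as a page index, as the example's increment of 1 suggests. The sketch mirrors three documented rules: the first request is always sent, condition is checked after each response, and values computed by pagination override the source's own query string parameters.

```python
from typing import Any, Callable, Dict, List


def paginate(
    fetch: Callable[[Dict[str, Any]], List[Any]],
    source_params: Dict[str, Any],
    limit: int,
) -> List[List[Any]]:
    """Request pages until a response holds fewer than `limit` items."""
    pages: List[List[Any]] = []
    params = dict(source_params)  # parameters for the first request
    while True:
        data = fetch(params)  # the first request is always sent
        pages.append(data)
        prev_request_params, prev_response_data = params, data
        # condition: continue only while the previous page was full
        if len(prev_response_data) != limit:
            break
        # params: pagination values override the source's values
        params = dict(source_params)
        params.update(
            offset=(prev_request_params.get("offset") or 0) + 1,
            limit=limit,
        )
    return pages


def fake_fetch(params: Dict[str, Any]) -> List[int]:
    """Hypothetical provider holding five records, served one page at a time."""
    records = [10, 20, 30, 40, 50]
    size = params["limit"]
    start = (params.get("offset") or 0) * size
    return records[start:start + size]


pages = paginate(fake_fetch, {"limit": 2}, limit=2)
```

With five records and a limit of 2, the loop sends three requests and stops after the third, because that response contains a single record and the condition no longer holds.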

HTTP Source Authentication¶

Overview¶

Some data sources also require authentication. For security reasons, placing authentication headers directly alongside the rest of the source definition is not recommended. Instead, the HTTP Source configuration provides an auth section that encapsulates authentication logic and details.

Most authentication methods supply fields to specify required query string parameters or headers. Before a request is sent to the data provider, the system combines the headers and parameters supplied inside and outside of the auth section. When the same header or parameter is declared in both places, the value from the auth section takes precedence.
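The precedence rule for the auth section can be pictured as a dictionary update. The following Python sketch is only a model of that rule, under the assumption that headers and parameters behave like flat key/value mappings; merge_request_fields is a hypothetical helper, not part of Pynoceros.

```python
from typing import Dict, Optional


def merge_request_fields(
    source_values: Optional[Dict[str, str]],
    auth_values: Optional[Dict[str, str]],
) -> Dict[str, str]:
    """Combine values declared outside and inside the auth section."""
    merged = dict(source_values or {})
    merged.update(auth_values or {})  # the auth section wins on collisions
    return merged


headers = merge_request_fields(
    {"Accept": "application/json", "X-Auth-Token": "placeholder"},
    {"X-Auth-Token": "8675309EABCDEF"},
)
```

Here the Accept header declared on the source is kept, while the X-Auth-Token declared in both places ends up with the value from the auth section.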

Simple Authentication¶

A simple auth declaration allows a Feed Definition writer to specify the tokens a provider requires for authentication, via headers, query string parameters, or both. The following demonstrates the usage of a simple auth within an HTTP Source definition.

    source:
      http:
        url: http://www.example.com/download_file.txt
        auth:
          simple:
            headers:
              X-Auth-Token: !expr "8675309EABCDEF"
            params:
              token: !expr "8675309EABCDEF"

Basic Authentication¶

A basic auth declaration uses the HTTP Basic authentication scheme to provide a username and password to the server. The following demonstrates the usage of basic auth within an HTTP Source definition.

    source:
      http:
        url: http://www.example.com/download_file.txt
        auth:
          basic:
            username: my_username
            password: my_password
            encoding: utf8  # This is optional and defaults to 'latin1'
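For background, the HTTP Basic scheme sends the username and password, joined by a colon and base64-encoded, in the Authorization header. The Python sketch below shows that construction. It assumes that the encoding option controls how the credentials are converted to bytes before base64 encoding, which the text above does not state explicitly; basic_auth_header is a hypothetical helper, not a Pynoceros function.

```python
import base64


def basic_auth_header(username: str, password: str, encoding: str = "latin1") -> str:
    """Build an HTTP Basic Authorization header value."""
    # Assumption: `encoding` selects the byte encoding of the credentials.
    credentials = f"{username}:{password}".encode(encoding)
    return "Basic " + base64.b64encode(credentials).decode("ascii")


header = basic_auth_header("my_username", "my_password")
```

Under this assumption the encoding only matters when the credentials contain non-ASCII characters, since ASCII text produces identical bytes in latin1 and utf8.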

Client SSL Authentication¶

The Client SSL Auth declaration allows one to authenticate with a feed provider using client certificate authentication.

The following fields are available for use within a Client SSL Auth declaration:

client_certificate - Required, String. The client certificate in base64 PEM encoding.

client_private_key - Optional, String. The private key in base64 PEM encoding for the associated client certificate, if applicable. If the private key is stored in the client certificate string, this field does not need to be used.

client_private_key_passphrase - Optional, String. Password for decrypting the client private key.

headers - Optional, Mapping. Headers that may also be needed for authentication.

params - Optional, Mapping. Query string parameters that may also be needed for authentication.

The following demonstrates the usage of a Client SSL Auth within an HTTP Source definition:

    source:
      http:
        url: https://www.example.com/download_file.txt
        auth:
          client_ssl:
            client_certificate: |-
              -----BEGIN CERTIFICATE-----
              AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA
              BBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBB
              CCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCC
              -----END CERTIFICATE-----
            client_private_key: |-
              -----BEGIN RSA PRIVATE KEY-----
              DDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDD
              EEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEE
              FFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFF
              -----END RSA PRIVATE KEY-----
            client_private_key_passphrase: super secret
            headers:
              X-Auth-Token: 8675309EABCDEF
            params:
              token: 8675309EABCDEF
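When preparing a Client SSL Auth declaration, it can be useful to check whether a PEM string already carries its private key, since client_private_key is only needed when it does not. The following Python sketch is a hypothetical pre-flight helper for a definition author, not part of Pynoceros; it only looks for a PEM private-key header and does not validate the certificate or key.

```python
import re

# Matches PEM headers such as "BEGIN PRIVATE KEY", "BEGIN RSA PRIVATE KEY",
# and "BEGIN ENCRYPTED PRIVATE KEY".
_PRIVATE_KEY_HEADER = re.compile(r"-----BEGIN (?:[A-Z0-9]+ )*PRIVATE KEY-----")


def needs_separate_private_key(client_certificate: str) -> bool:
    """Return True when the PEM text contains no private-key block."""
    return _PRIVATE_KEY_HEADER.search(client_certificate) is None


cert_only = "-----BEGIN CERTIFICATE-----\nAAAA\n-----END CERTIFICATE-----"
combined = cert_only + "\n-----BEGIN RSA PRIVATE KEY-----\nDDDD\n-----END RSA PRIVATE KEY-----"
```

For cert_only the helper reports that a separate client_private_key is needed; for combined it reports that the key is already present in the certificate string.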

Multiple Authentication¶

The Multiple Authentication declaration allows one to aggregate multiple authentication methods in order to authenticate with a feed provider.

The following demonstrates the usage of a Multiple Authentication within an HTTP Source definition:

    source:
      http:
        url: https://www.example.com/download_file.txt
        auth:
          multi:
            - basic:
                username: my_username
                password: my_password
            - client_ssl:
                client_certificate: |-
                  -----BEGIN CERTIFICATE-----
                  AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA
                  BBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBB
                  CCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCCC
                  -----END CERTIFICATE-----
                client_private_key: |-
                  -----BEGIN RSA PRIVATE KEY-----
                  DDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDDD
                  EEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEE
                  FFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFF
                  -----END RSA PRIVATE KEY-----

Token-based Authentication¶

Token-based Authentication allows a CDF writer to authenticate with services that use standards such as OAuth2 or JWT, in cases where user interaction is not required. For OAuth2, for instance, this means that only the Client Credentials Grant and Password Grant flows are supported. Authentication tokens are cached in memory to avoid unnecessary reauthentication between feed runs and across feeds. Note that a cached token may be accessed by any Feed that references it with a matching token_identifier_set, as long as the token is unexpired. It is therefore a best practice for a token_identifier_set to uniquely and securely identify a token.

Within the context of a CDF, a Supplemental Feed defines how to fetch and parse a response from an authentication endpoint to obtain a token and, optionally, an expiration (which defaults to one hour). The expiration prevents requests from being made with an invalid token, which lightens the load on both the client and the server: the cache automatically reauthenticates with the provider when a feed run requests an expired token. After the Supplemental Feed run is complete, the parent feed may use the token to authenticate its own request. The token is available in the Jinja2 context under the token variable and may be referenced with a Jinja2 Expression or Template within headers or params. In the example below, the !tmpl under the headers key demonstrates this.

New in version 4.41.0.

The following example demonstrates the usage of a Token-based Authentication within an HTTP Source definition:

    feeds:
      Intelligence Provider - Bad Guys:
        user_fields:
          - username
          - name: password
            mask: True
        source:
          http:
            url: https://api.intel-data-provider.com/intel/bad-guys
            auth:
              token:
                headers:
                  Authorization: !tmpl 'Bearer: {{token}}'
                reauthorize_error_codes:
                  - 401
                  - 403
                  - 500
                token_feed:
                  name: Intelligence Provider - Authentication
                token_identifier_set:
                  - !expr user_fields.username
                  - !expr user_fields.password
                  - 'any arbitrary string'
        ...
      Intelligence Provider - Authentication:
        feed_type: supplemental
        source:
          http:
            url: https://api.intel-data-provider.com/auth/token
            params:
              grant_type: client_credentials
            auth:
              basic:
                username: !expr user_fields.username
                password: !expr user_fields.password
        filters:
          - parse-json
          - get: token
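The caching behaviour described for Token-based Authentication can be sketched in Python as follows. This is a simplified model, not the Pynoceros implementation: TokenCache, its get method, and the authenticate callback are hypothetical names, and the sketch assumes that the resolved values of a token_identifier_set act as an ordered cache key. It illustrates three documented points: a token is shared by every caller presenting a matching identifier set, the expiration defaults to one hour, and an expired token triggers reauthentication.

```python
import time
from typing import Callable, Dict, Hashable, Optional, Tuple

DEFAULT_TTL_SECONDS = 3600  # documented default expiration of one hour


class TokenCache:
    """In-memory token cache keyed by token_identifier_set values."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._tokens: Dict[Tuple[Hashable, ...], Tuple[str, float]] = {}

    def get(
        self,
        identifier_set: Tuple[Hashable, ...],
        authenticate: Callable[[], Tuple[str, Optional[float]]],
    ) -> str:
        entry = self._tokens.get(identifier_set)
        if entry is not None and self._clock() < entry[1]:
            return entry[0]  # unexpired token: reuse it
        # Missing or expired: run the (hypothetical) authentication callback.
        token, ttl = authenticate()
        lifetime = DEFAULT_TTL_SECONDS if ttl is None else ttl
        self._tokens[identifier_set] = (token, self._clock() + lifetime)
        return token


# Usage with a fake clock and a fake authentication callback.
now = [0.0]
calls = []


def fake_authenticate() -> Tuple[str, Optional[float]]:
    calls.append(1)
    return f"token-{len(calls)}", None  # no expiration supplied


cache = TokenCache(clock=lambda: now[0])
key = ("my_username", "my_password", "any arbitrary string")
first = cache.get(key, fake_authenticate)
second = cache.get(key, fake_authenticate)  # served from the cache
now[0] = 3601.0  # just past the default one-hour lifetime
third = cache.get(key, fake_authenticate)  # expired, so reauthenticated
```

Because any caller with the same key receives the same cached token, the sketch also shows why an identifier set built from user-specific values is safer than a constant string alone.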

The following fields are used under the token key, and are required unless otherwise noted:

token_feed - Mapping that denotes the following fields:

    name - Name of the Supplemental Feed to use to retrieve a token.

    run-params - Optional, defaults to None. Mapping of run-params to be sent to the token Supplemental Feed. Note that the user field values do not have to be passed as run-params.